This is a brief summary of the paper Dependency-Based Word Embeddings (Levy and Goldberg, ACL 2014), which I read and studied; I wrote it to help me study and organize its ideas.

Previous work on neural word embeddings takes the context of a word to be its linear context: the words that precede and follow the target word, typically within a window of k tokens on each side. In this work, the authors generalize the SKIPGRAM model and move from linear bag-of-words contexts to arbitrary word contexts.
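To make the linear (bag-of-words) context concrete, here is a minimal Python sketch that collects (word, context) pairs from a symmetric window of k tokens. The helper name `linear_contexts` is hypothetical and the code only illustrates the idea; it is not the authors' implementation. The sentence is the example used in the paper, lowercased.

```python
def linear_contexts(tokens, k):
    """Return (word, context) pairs from a symmetric window of k tokens.

    Hypothetical helper for illustration: every token within k positions
    to the left or right of the target becomes an (untyped) context.
    """
    pairs = []
    for i, word in enumerate(tokens):
        start = max(0, i - k)
        end = min(len(tokens), i + k + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((word, tokens[j]))
    return pairs


sentence = "australian scientist discovers star with telescope".split()
print([c for w, c in linear_contexts(sentence, k=2) if w == "discovers"])
# ['australian', 'scientist', 'star', 'with']
```

With k=2, australian becomes a context of discovers even though it actually modifies scientist.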

They propose arbitrary contexts for word embeddings, in particular contexts based on the syntactic relations the word participates in. In other words, they generalize SKIPGRAM by replacing the bag-of-words contexts with arbitrary contexts.
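Swapping the contexts is possible because skip-gram with negative sampling (SGNS), the SKIPGRAM variant the paper builds on, only ever sees (word, context) pairs, with separate word and context vocabularies, so a context can be any string. Below is a minimal NumPy sketch of one SGD step on a single pair. It assumes the caller supplies the negative samples (word2vec draws them from a unigram distribution raised to the 3/4 power, which is omitted here); the function name, learning rate and toy context labels are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def sgns_step(W, C, w, c, negatives, lr=0.025):
    """One SGD step of skip-gram with negative sampling on one (w, c) pair.

    W: word vectors, C: context vectors (separate matrices, updated in place).
    w, c: row indices of the word and the observed context.
    negatives: row indices of sampled "bad" contexts (sampling not shown).
    Returns the loss of this pair, computed before the update.
    """
    wv = W[w].copy()
    grad_w = np.zeros_like(wv)
    loss = 0.0
    # Observed context gets label 1 (push sigmoid(w . c) up);
    # negative samples get label 0 (push sigmoid(w . c') down).
    for ctx, label in [(c, 1.0)] + [(n, 0.0) for n in negatives]:
        score = 1.0 / (1.0 + np.exp(-(wv @ C[ctx])))
        g = score - label          # gradient of the loss w.r.t. the dot product
        grad_w += g * C[ctx]       # uses the context vector before its update
        C[ctx] -= lr * g * wv
        loss -= np.log(score if label == 1.0 else 1.0 - score)
    W[w] -= lr * grad_w
    return float(loss)


# Separate vocabularies: a context may be any string, e.g. a typed
# dependency context (toy labels for illustration).
words = {"discovers": 0}
contexts = {"scientist/nsubj": 0, "star/dobj": 1, "australian": 2}
dim = 4
W = rng.uniform(-0.5, 0.5, size=(len(words), dim)) / dim
C = np.zeros((len(contexts), dim))  # context vectors start at zero, as in word2vec

loss = sgns_step(W, C, words["discovers"], contexts["star/dobj"],
                 negatives=[contexts["australian"]])
print(round(loss, 4))  # 1.3863: with C at zero both scores are 0.5, so 2 * log(2)
```

Nothing in `sgns_step` depends on how the pairs were produced, which is why linear windows and dependency contexts can share the same trainer.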

These syntactic contexts are obtained with a dependency parser, which analyzes each sentence into syntactic dependencies between its words.

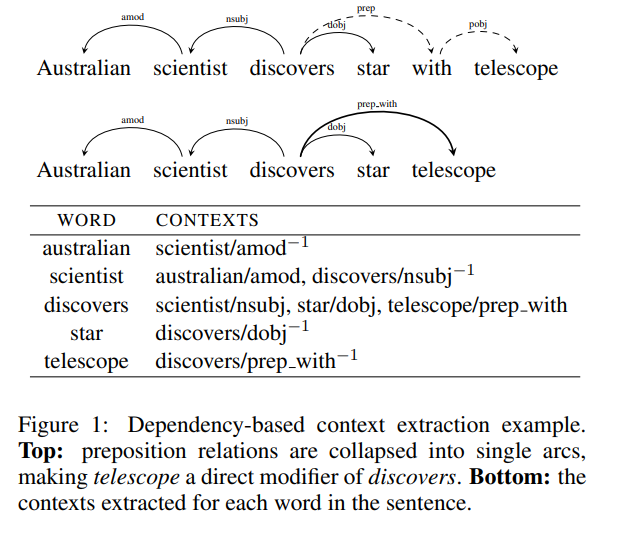

They filter out “coincidental” contexts that fall within the window but are not directly related to the target word (e.g., Australian is not used as a context for discovers). In addition, the contexts are typed, indicating, for example, that stars are objects of discovery and scientists are subjects.

They thus expect the syntactic contexts to yield more focused embeddings, capturing more functional and less topical similarity.

The following is an example of dependency-based context extraction.

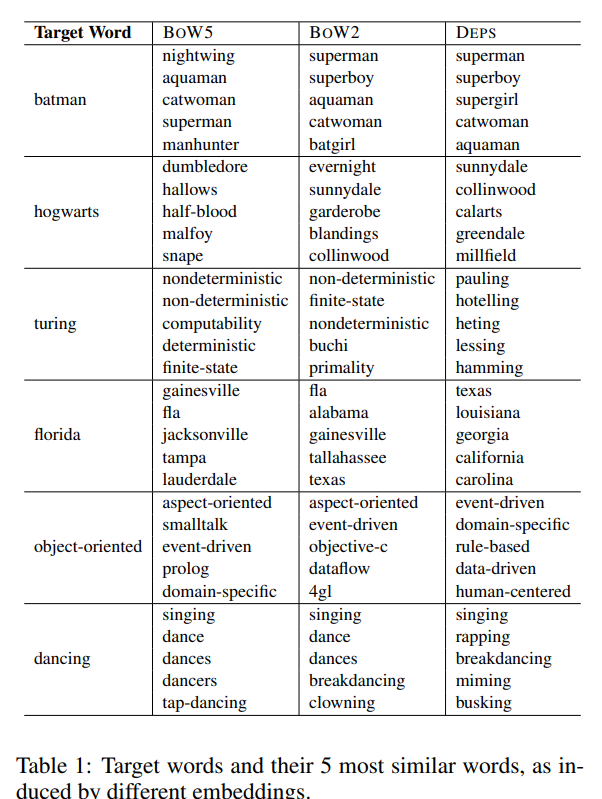
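The extraction in the figure can be sketched in Python. For a target word with modifiers m1, ..., mk and head h, the paper uses the contexts (m1, lbl1), ..., (mk, lblk) and (h, lbl_h^-1), where the -1 marks the inverse relation, and it collapses preposition arcs so that telescope becomes a direct prep_with context of discovers. In this sketch the parse is written by hand as (head, label, modifier) index triples rather than produced by a real parser, and the helper names are hypothetical.

```python
def collapse_prepositions(tokens, arcs):
    """Fold head -prep-> preposition -pobj-> object into head -prep_<p>-> object."""
    pobj = {h: m for h, lbl, m in arcs if lbl == "pobj"}  # preposition -> its object
    preps = {m for h, lbl, m in arcs if lbl == "prep" and m in pobj}
    collapsed = []
    for h, lbl, m in arcs:
        if lbl == "prep" and m in preps:
            collapsed.append((h, "prep_" + tokens[m], pobj[m]))
        elif lbl == "pobj" and h in preps:
            continue  # already folded into the prep_<p> arc
        else:
            collapsed.append((h, lbl, m))
    return collapsed


def dependency_contexts(tokens, arcs):
    """Return (word, typed context) pairs from a dependency parse."""
    pairs = []
    for h, lbl, m in collapse_prepositions(tokens, arcs):
        pairs.append((tokens[h], f"{tokens[m]}/{lbl}"))    # head sees its modifier
        pairs.append((tokens[m], f"{tokens[h]}/{lbl}-1"))  # modifier sees its head (inverse)
    return pairs


tokens = "australian scientist discovers star with telescope".split()
# Hand-written parse as (head, label, modifier) index triples.
arcs = [(1, "amod", 0), (2, "nsubj", 1), (2, "dobj", 3), (2, "prep", 4), (4, "pobj", 5)]

pairs = dependency_contexts(tokens, arcs)
print([c for w, c in pairs if w == "discovers"])
# ['scientist/nsubj', 'star/dobj', 'telescope/prep_with']
```

Here australian is never a context of discovers, and with no longer appears as a separate word once the preposition is folded into the label.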

They found that BOW contexts find words that associate with w, while DEPS contexts find words that behave like w. They also observe that while both BOW5 and BOW2 (bag-of-words windows of k=5 and k=2) yield topical similarities, the larger window size results in more topicality, as expected.

The results are shown in Table 1 of the paper.
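Most-similar lists like those in Table 1 are typically produced by ranking the vocabulary by cosine similarity to the target word's vector. Here is a minimal NumPy sketch; `most_similar` is a hypothetical name, and the three-word vocabulary and its vectors are invented for illustration, not taken from the paper.

```python
import numpy as np


def most_similar(target, vocab, vectors, topn=5):
    """Return the topn words ranked by cosine similarity to `target`."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sims = unit @ unit[vocab.index(target)]
    ranked = [i for i in np.argsort(-sims) if vocab[i] != target]
    return [(vocab[i], float(sims[i])) for i in ranked[:topn]]


# Toy 3-d vectors, invented for illustration only.
vocab = ["cat", "dog", "car"]
vectors = np.array([[1.0, 0.1, 0.0],
                    [0.9, 0.2, 0.0],
                    [0.0, 0.0, 1.0]])
print([w for w, _ in most_similar("cat", vocab, vectors, topn=2)])
# ['dog', 'car']
```

Running the same query once on BOW vectors and once on DEPS vectors gives the kind of side-by-side neighbour lists the comparison relies on.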

Neural word embeddings are often considered opaque and uninterpretable: it is hard to assign meanings to the dimensions of the induced representation, unlike sparse vector space representations, in which each dimension corresponds to a particular known context, or LDA models, where dimensions correspond to latent topics. While this is true to a large extent, the authors observe that SKIPGRAM does allow a non-trivial amount of introspection. Although they cannot assign a meaning to any particular dimension, they can query the model for the contexts that are “activated” by a target word. This gives a glimpse of the kind of information the model captures and shows which contexts the learning process found most discriminative of particular words (or groups of words).
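In SGNS the training objective pushes the dot product w · c up for observed (word, context) pairs, so one way to ask which contexts a word "activates" is to rank the context vectors by their dot product with the word vector. This is a minimal sketch assuming trained word and context matrices are available; `top_contexts`, the context labels, and the numbers are hypothetical toy values, not trained ones.

```python
import numpy as np


def top_contexts(word_vec, context_vocab, C, topn=3):
    """Rank contexts by their dot product w . c with one word vector."""
    scores = C @ word_vec
    order = np.argsort(-scores)[:topn]
    return [(context_vocab[i], float(scores[i])) for i in order]


# Toy context matrix and word vector (hypothetical, not trained values).
context_vocab = ["star/dobj", "scientist/nsubj", "telescope/prep_with"]
C = np.array([[1.0, 0.0],
              [0.2, 0.9],
              [0.5, 0.5]])
w = np.array([1.0, 0.1])
print([c for c, _ in top_contexts(w, context_vocab, C)])
# ['star/dobj', 'telescope/prep_with', 'scientist/nsubj']
```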

Reference

- Paper: Levy and Goldberg (2014), Dependency-Based Word Embeddings, ACL 2014

- How to use html for alert