This is a brief summary of a paper I read and studied, written to organize my notes: Better Word Representations with Recursive Neural Networks for Morphology (Luong et al., CoNLL 2013).

They say that existing methods treat each full-form word as an independent entity and fail to capture the explicit relationship among the morphological variants of a word.

These models also cannot build representations for new, unseen words composed of known morphemes.

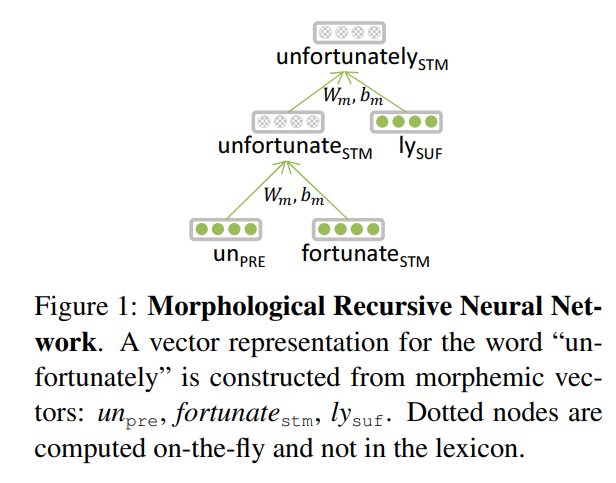

Therefore, they propose a recursive neural network (RNN) as the compositional function from morphemes to a word.

In other words, this model focuses on constructing a word vector from its morphemes, without the context information that is normally used to learn the distributed representation of a word.
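A minimal sketch of this composition in plain Python, assuming the parent vector is computed as \(p = f(W_m [x_{stem}; x_{affix}] + b_m)\) with \(f = \tanh\). The function names, the toy dimension, the random initialization, and the order in which affixes are folded in are illustrative assumptions, not the authors' code.

```python
import math
import random


def compose(stem_vec, affix_vec, W_m, b_m):
    """Return the parent vector p = tanh(W_m [stem; affix] + b_m)."""
    child = stem_vec + affix_vec  # list concatenation: [x_stem; x_affix]
    return [
        math.tanh(sum(w * x for w, x in zip(row, child)) + bias)
        for row, bias in zip(W_m, b_m)
    ]


def build_word(stem, affixes, morpheme_emb, W_m, b_m):
    """Fold each affix into the running stem vector, one at a time."""
    vec = morpheme_emb[stem]
    for affix in affixes:
        vec = compose(vec, morpheme_emb[affix], W_m, b_m)
    return vec


# Toy setup: every value here is made up for illustration.
rng = random.Random(0)
d = 4  # embedding dimension (assumption)
morpheme_emb = {
    m: [rng.uniform(-0.1, 0.1) for _ in range(d)]
    for m in ["fortunate", "un", "ly"]
}
W_m = [[rng.uniform(-0.1, 0.1) for _ in range(2 * d)] for _ in range(d)]
b_m = [0.0] * d

# "unfortunately" = (un + fortunate) + ly, built only from known morphemes.
unfortunately = build_word("fortunate", ["un", "ly"], morpheme_emb, W_m, b_m)
print(len(unfortunately))
```

Because the word vector is computed from morpheme vectors, a word that never appeared in training still gets a representation as long as its morphemes are known.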

The context-insensitive morphological RNN

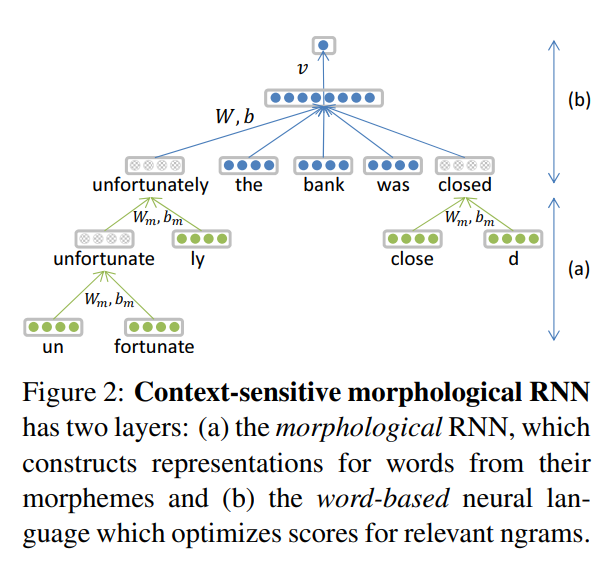

The next model, based on an n-gram neural language model, has two layers. The first constructs word embeddings from their morphemes, and the second is an n-gram neural network with a ranking-type cost function.

The context-sensitive morphological RNN separates the morpheme level (stem + affix), where morphemes are the minimum meaning-bearing units, from the word level.
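The word-level layer can be sketched as a scoring function over the concatenated word vectors of an n-gram, assuming the form \(s(n) = v^\top f(W [x_1; \dots; x_n] + b)\) with \(f = \tanh\). The parameter names and toy numbers below are assumptions for illustration; in the context-sensitive model each \(x_j\) would itself be built from morphemes by the first layer.

```python
import math


def score_ngram(word_vectors, W, b, v):
    """Score an n-gram: s = v . tanh(W [x_1; ...; x_n] + b)."""
    # Concatenate the n word vectors into one long input vector.
    x = [component for vec in word_vectors for component in vec]
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + bias)
        for row, bias in zip(W, b)
    ]
    return sum(vi * hi for vi, hi in zip(v, hidden))


# Toy bigram with 1-dimensional word vectors and one hidden unit.
W = [[1.0, 1.0]]
b = [0.0]
v = [2.0]
s = score_ngram([[1.0], [0.0]], W, b, v)  # equals 2 * tanh(1)
print(s)
```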

They train the models above with a ranking-type cost function, defining the objective function to minimize as follows:

\[J(\theta) = \sum_{i=1}^N \max\{0, 1 - s(n_i) + s(\bar n_i)\}\]

Here, \(N\) is the number of all available n-grams in the training corpus, \(s(\cdot)\) is the score the network assigns to an n-gram, and \(\bar n_i\) is a corrupted n-gram created from \(n_i\) by replacing its last word with a random word.
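To make the objective concrete, here is a small sketch that evaluates the ranking loss for a list of n-grams given any scoring function. The helper names (`corrupt`, `ranking_loss`), the toy vocabulary, and the toy scorer are hypothetical. Excluding the original last word when sampling the replacement is also my own assumption; the summary above only says "a random word".

```python
import random


def corrupt(ngram, vocab, rng):
    """Replace the last word of an n-gram (a tuple) with a random other word."""
    candidates = [w for w in vocab if w != ngram[-1]]  # assumption: skip the original
    return ngram[:-1] + (rng.choice(candidates),)


def ranking_loss(ngrams, score, vocab, rng):
    """Sum of max(0, 1 - s(n_i) + s(corrupted n_i)) over all n-grams."""
    total = 0.0
    for ngram in ngrams:
        total += max(0.0, 1.0 - score(ngram) + score(corrupt(ngram, vocab, rng)))
    return total


# Toy check: a scorer that already separates observed n-grams by a margin >= 1.
vocab = ["cat", "dog"]
observed = [("the", "cat")]
good_score = lambda n: 2.0 if n in observed else 0.0
flat_score = lambda n: 0.0  # an untrained scorer that cannot tell them apart

rng = random.Random(0)
print(ranking_loss(observed, good_score, vocab, rng))
print(ranking_loss(observed, flat_score, vocab, rng))
```

The loss is zero only when each observed n-gram outscores its corrupted version by at least 1, which is the condition that training pushes the scores toward.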

The paper: Better Word Representations with Recursive Neural Networks for Morphology (Luong et al., CoNLL 2013)
