This is a brief summary of a paper I read and studied, written to help me study and organize it: An efficient framework for learning sentence representations (Logeswaran and Lee, ICLR 2018).

They propose a simple method for representing a sentence as a fixed-length vector, which they call Quick-Thought vectors.

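The method is similar to the skip-gram method of word2vec; that is, they extend skip-gram to the sentence level. Instead of using the words around a target word as context, they use the sentences around a target sentence.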
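To make the skip-gram analogy concrete, here is a minimal sketch of how (sentence, context) training pairs could be built from an ordered document. It assumes a context window of one sentence on each side (the previous and next sentences); `make_context_pairs` is a hypothetical helper, not code from the paper.

```python
from typing import List, Tuple


def make_context_pairs(sentences: List[str], window: int = 1) -> List[Tuple[str, str]]:
    """Hypothetical helper: pair each sentence with its neighbours within
    `window` positions, the sentence-level analogue of skip-gram's
    (centre word, context word) pairs."""
    pairs = []
    for i, sentence in enumerate(sentences):
        for offset in range(-window, window + 1):
            j = i + offset
            # skip the sentence itself and positions outside the document
            if offset != 0 and 0 <= j < len(sentences):
                pairs.append((sentence, sentences[j]))
    return pairs


doc = ["I woke up.", "I made coffee.", "Then I left."]
for pair in make_context_pairs(doc):
    print(pair)
```

With `window=1`, the first and last sentences get one context sentence each and every sentence in between gets two, just as words near the edge of a text get fewer context words in skip-gram.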

The difference from skip-thought vectors is that they use only an encoder, with no decoder: an RNN (here a GRU) maps a sentence to a fixed-length vector.
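The sketch below shows how a GRU turns a variable-length sentence into a fixed-length vector. It is a toy NumPy version under stated assumptions: the class name `GRUEncoder` is hypothetical, the weights are random rather than trained, the inputs are random stand-ins for word embeddings, and the final hidden state is taken as the sentence vector.

```python
import numpy as np


class GRUEncoder:
    """Hypothetical minimal GRU: maps (seq_len, input_dim) word vectors to the
    final hidden state. Weights are random here; a real encoder is trained."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden_dim)
        # one (W, U, b) triple per gate: update z, reset r, candidate n
        self.W = {g: rng.uniform(-scale, scale, (hidden_dim, input_dim)) for g in "zrn"}
        self.U = {g: rng.uniform(-scale, scale, (hidden_dim, hidden_dim)) for g in "zrn"}
        self.b = {g: np.zeros(hidden_dim) for g in "zrn"}
        self.hidden_dim = hidden_dim

    @staticmethod
    def _sigmoid(x: np.ndarray) -> np.ndarray:
        return 1.0 / (1.0 + np.exp(-x))

    def encode(self, word_vectors: np.ndarray) -> np.ndarray:
        """Run the GRU over the words and return the last hidden state."""
        h = np.zeros(self.hidden_dim)
        for x in word_vectors:
            z = self._sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])
            r = self._sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])
            n = np.tanh(self.W["n"] @ x + self.U["n"] @ (r * h) + self.b["n"])
            # interpolate between the new candidate state and the previous state
            h = (1.0 - z) * n + z * h
        return h


enc = GRUEncoder(input_dim=4, hidden_dim=8)
short = np.random.default_rng(1).normal(size=(3, 4))   # 3-word sentence
long = np.random.default_rng(2).normal(size=(10, 4))   # 10-word sentence
print(enc.encode(short).shape, enc.encode(long).shape)  # both (8,)
```

However many words the sentence has, the output size depends only on `hidden_dim`, which is what makes the representation fixed-length.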

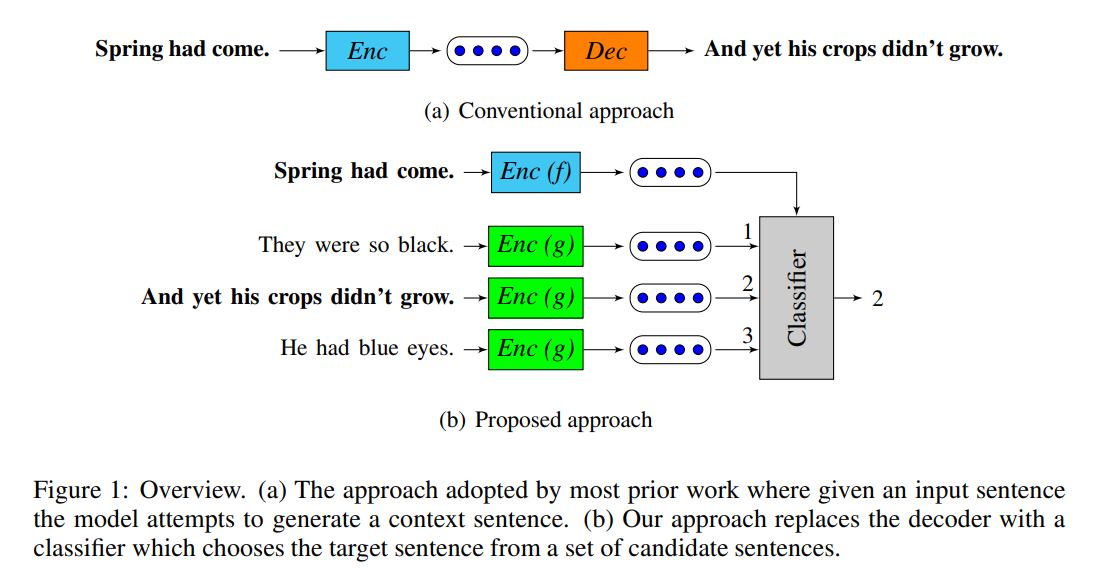

They replace the decoder, which in skip-thought generates the adjacent sentences, with a discriminative approximation: a classifier that decides whether a candidate context sentence is adjacent to the input sentence.
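Here is a small sketch of that discriminative step for a single input sentence, assuming (as I read the paper) that the classifier scores a candidate with the inner product of the two encodings and normalizes the scores with a softmax over the candidate set. The function name and the toy vectors are hypothetical.

```python
import numpy as np


def context_probabilities(u: np.ndarray, candidates: np.ndarray) -> np.ndarray:
    """Hypothetical helper: score each candidate with an inner product u^T v
    and normalise the scores with a softmax.
    u: (d,) encoding of the input sentence; candidates: (k, d) encodings."""
    scores = candidates @ u
    scores = scores - scores.max()  # subtract the max for numerical stability
    exp = np.exp(scores)
    return exp / exp.sum()


u = np.array([1.0, 0.0])
candidates = np.array([[2.0, 0.0],    # the true neighbour, aligned with u
                       [0.0, 2.0],    # unrelated sentence
                       [-1.0, 0.0]])  # unrelated sentence
probs = context_probabilities(u, candidates)
print(probs.argmax())  # → 0
```

Picking the right neighbour among a handful of candidates only needs dot products, whereas a decoder has to reconstruct the neighbouring sentence word by word.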

The method is as follows:
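Written out as I understand the paper's formulation (the symbols are my own labels for the pieces described above): $f$ encodes the input sentence $s$, a second encoder $g$ encodes the candidate sentences, the classifier is the inner product $c(u, v) = u^\top v$, $S_{\text{ctxt}}$ holds the true neighbouring sentences, $S_{\text{cand}}$ holds those neighbours plus the contrastive sentences, and $D$ is the training corpus.

$$
p(s_{\text{ctxt}} \mid s, S_{\text{cand}}) = \frac{\exp\left[c\left(f(s), g(s_{\text{ctxt}})\right)\right]}{\sum_{s' \in S_{\text{cand}}} \exp\left[c\left(f(s), g(s')\right)\right]}
$$

$$
\max_{f,\,g} \; \sum_{s \in D} \sum_{s_{\text{ctxt}} \in S_{\text{ctxt}}} \log p(s_{\text{ctxt}} \mid s, S_{\text{cand}})
$$

No word-by-word decoding appears in this objective, only inner products and a softmax over a small candidate set, which is presumably where much of the training-time saving comes from.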

Note (Abstract):

In this work they propose a simple and efficient framework for learning sentence representations from unlabelled data. Drawing inspiration from the distributional hypothesis and recent work on learning sentence representations, they reformulate the problem of predicting the context in which a sentence appears as a classification problem. Given a sentence and its context, a classifier distinguishes context sentences from other contrastive sentences based on their vector representations. This allows them to efficiently learn different types of encoding functions. Their method is called quick-thought vectors.
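One way to write the minibatch version of this classification loss is sketched below. Assumptions, all mine: the batch holds consecutive sentences, so for sentence `i` the context sentences are `i-1` and `i+1`; every other sentence in the batch serves as a contrastive sentence; a sentence is never its own candidate (the diagonal is masked); and the loss is the mean negative log-probability over all targets. The function name is hypothetical and the exact averaging may differ from the authors' code.

```python
import numpy as np


def quick_thought_batch_loss(f_out: np.ndarray, g_out: np.ndarray) -> float:
    """Hypothetical sketch: mean negative log-likelihood over a minibatch.

    f_out, g_out: (batch, d) encodings of the same consecutive sentences from
    the two encoders (batch >= 2). For sentence i the targets are i-1 and i+1;
    all other sentences in the batch act as contrastive candidates.
    """
    batch = f_out.shape[0]
    scores = f_out @ g_out.T                 # inner-product classifier, (batch, batch)
    np.fill_diagonal(scores, -np.inf)        # a sentence is not its own candidate
    scores = scores - scores.max(axis=1, keepdims=True)
    log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
    losses = []
    for i in range(batch):
        for j in (i - 1, i + 1):             # previous and next sentence
            if 0 <= j < batch:
                losses.append(-log_probs[i, j])
    return float(np.mean(losses))


rng = np.random.default_rng(0)
f_out = rng.normal(size=(4, 8))
g_out = rng.normal(size=(4, 8))
print(quick_thought_batch_loss(f_out, g_out))
```

The loss only sees the encoder outputs, so any encoder that produces fixed-length vectors could be plugged in for `f` and `g`; that seems to be what the abstract means by learning different types of encoding functions. Reusing the other sentences in the batch as contrastive examples also avoids sampling negatives separately.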

They also report an order-of-magnitude speedup in training time.

Download URL:

The paper: An efficient framework for learning sentence representations (Logeswaran and Lee, ICLR 2018)


Reference

- Paper


- For your information

- GitHub repository for An efficient framework for learning sentence representations (Lajanugen Logeswaran and Honglak Lee, ICLR 2018)

- word2vec Explained: deriving Mikolov et al.’s negative-sampling word-embedding method (Goldberg and Levy, arXiv 2014)

- Word2Vec Tutorial Part 2 - Negative Sampling, Chris McCormick's blog

- Optimize Computational Efficiency of Skip-Gram with Negative Sampling, aegis4048 blog

- How does sub-sampling of frequent words work in the context of Word2Vec?, Quora

- word2vec: negative sampling (in layman term)?, Stack Overflow

- Learning Word Embedding, Lil’Log