This is a brief summary, written to help me study and organize it, of a paper I read: Retrofitting Contextualized Word Embeddings with Paraphrases (Shi et al., EMNLP and IJCNLP 2019).

Retrofitting means the semantic specialization of distributional word vectors, i.e. already-trained vectors can be retrofitted with external knowledge such as lexical-semantic knowledge. This is also known as semantic specialization.

Besides post-processing retrofitting models, which fine-tune pre-trained distributional vectors to better reflect external linguistic constraints,

there are two other kinds of specialization methods:

1) joint specialization methods, which augment distributional learning objectives with external linguistic constraints, and 2) the more recently proposed post-specialization methods, which generalize the perturbations of the post-processing methods to the whole distributional space.

This paper, however, explains how to retrofit contextualized embeddings with the context information of paraphrases, as illustrated in the figure below.

The authors write:

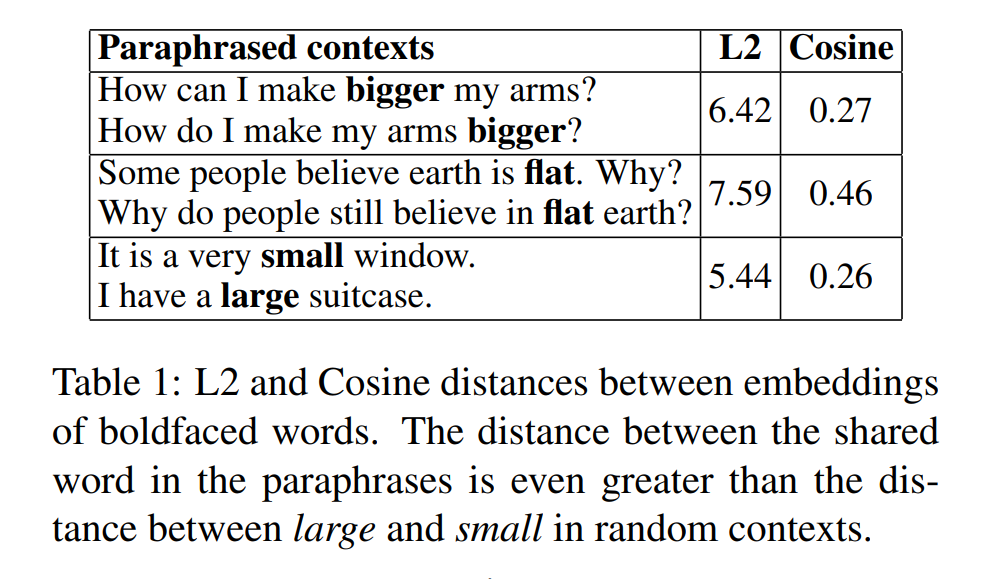

These embedding models encode both words and their contexts and generate context-specific representations. While contextualized embeddings are useful, we observe that a language model-based embedding model, ELMo, cannot accurately capture the semantic equivalence of contexts.

Without retraining the parameters of an existing model, the proposed method learns a transformation that minimizes the difference between the contextualized representations of the shared word in paraphrased contexts, while differentiating between those in other contexts.

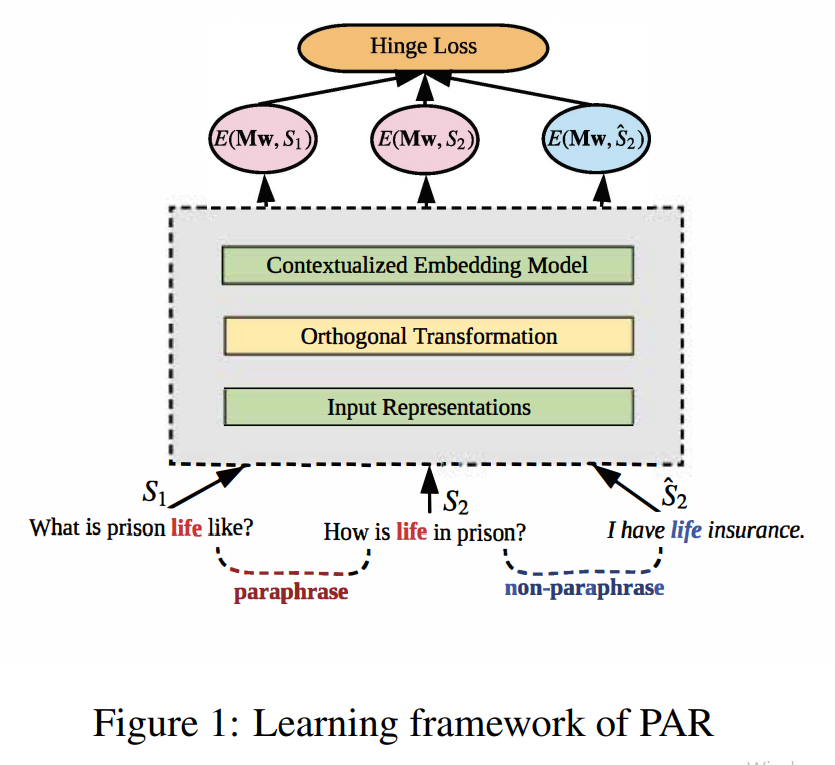

They propose a model called Paraphrase-Aware Retrofitting (PAR), shown below:

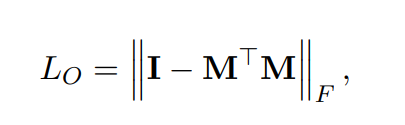

PAR learns an orthogonal transformation $M \in \mathbb{R}^{k \times k}$ to reshape the input representation into a specific space in which the contextualized representations of a word in paraphrased contexts lie close together.

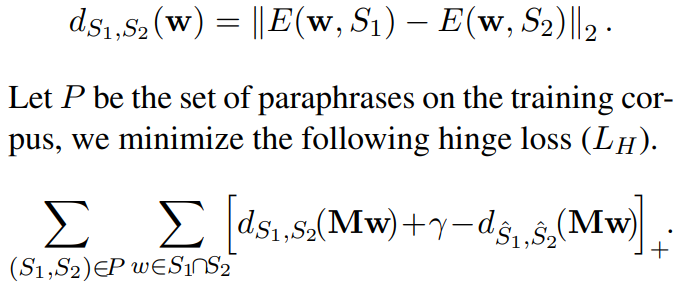
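To make the role of $M$ concrete, here is a minimal NumPy sketch of applying an orthogonal matrix to an input vector. It is only an illustration: the dimension `k`, the random vector `x`, and the QR-based construction of `M` are assumptions of this sketch, whereas PAR learns $M$ from paraphrase data.

```python
import numpy as np

rng = np.random.default_rng(0)
k = 4  # hypothetical input embedding size

# The Q factor of a QR decomposition is an orthogonal matrix.
# (Assumption: used here only to obtain some orthogonal M; PAR learns M.)
M, _ = np.linalg.qr(rng.standard_normal((k, k)))

x = rng.standard_normal(k)  # stand-in for the input representation of a word
x_retrofitted = M @ x       # reshaped input that would be fed to the encoder

# Orthogonality means M^T M = I, so the vector norm is preserved.
is_orthogonal = np.allclose(M.T @ M, np.eye(k))
norm_preserved = np.isclose(np.linalg.norm(x), np.linalg.norm(x_retrofitted))
```

Because an orthogonal map only rotates or reflects the input space, distances and angles between input vectors stay the same; only what the frozen encoder sees is changed.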

Given two contexts $S_1$ and $S_2$ that both contain a shared word $w$, the contextual difference of an input representation of $w$ is defined by the L2 distance:

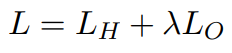
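The contextual difference can be sketched in a few lines of NumPy. This is a toy illustration, not the paper's code: `toy_encoder` is a hypothetical stand-in for a contextual encoder such as ELMo, and the sentences are random vectors.

```python
import numpy as np

def toy_encoder(inputs, index):
    """Hypothetical stand-in for a contextual encoder such as ELMo:
    mixes the target word's input vector with the sentence mean."""
    return np.tanh(inputs[index] + inputs.mean(axis=0))

def contextual_difference(M, s1, i1, s2, i2, encoder=toy_encoder):
    """L2 distance between the contextualized vectors of the shared word,
    at position i1 in s1 and i2 in s2, after applying M to every input row."""
    h1 = encoder(s1 @ M.T, i1)  # each row x becomes M x
    h2 = encoder(s2 @ M.T, i2)
    return float(np.linalg.norm(h1 - h2))

rng = np.random.default_rng(0)
k = 4
w = rng.standard_normal(k)                        # input vector of the shared word
s1 = np.vstack([w, rng.standard_normal((2, k))])  # context S1, w at position 0
s2 = np.vstack([rng.standard_normal((3, k)), w])  # context S2, w at position 3
M = np.eye(k)                                     # identity: no retrofitting yet

d = contextual_difference(M, s1, 0, s2, 3)
d_same = contextual_difference(M, s1, 0, s1, 0)
```

Even though `w` has the same input vector in both sentences, `d` is non-zero because the encoder mixes in the surrounding words, which is exactly the gap that retrofitting tries to shrink for paraphrases.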

The training objective of PAR is denoted as follows:
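As a rough illustration of the idea (pull the shared word's representations together in paraphrased contexts, push them apart in other contexts, and keep $M$ close to orthogonal), the sketch below uses a hinge loss with a margin. The hinge form, the margin `gamma`, the weight `lam`, and the penalty term are assumptions made for illustration and may differ from the paper's exact objective.

```python
import numpy as np

def par_style_loss(d_pos, d_neg, M, gamma=1.0, lam=0.1):
    """Hinge loss over contextual differences plus an orthogonality penalty.

    d_pos: distances for the shared word in paraphrased context pairs
    d_neg: distances for the shared word in non-paraphrase context pairs
    gamma, lam: hypothetical margin and penalty weight (assumptions)
    """
    d_pos = np.asarray(d_pos, dtype=float)
    d_neg = np.asarray(d_neg, dtype=float)
    # Penalize paraphrase pairs that are not at least gamma closer
    # than the corresponding non-paraphrase pairs.
    hinge = np.maximum(0.0, d_pos + gamma - d_neg).sum()
    k = M.shape[0]
    # Frobenius norm of (M^T M - I) is zero exactly when M is orthogonal.
    ortho_penalty = np.linalg.norm(M.T @ M - np.eye(k))
    return float(hinge + lam * ortho_penalty)

M = np.eye(3)
loss_good = par_style_loss([0.1, 0.2], [2.0, 3.0], M)  # negatives beyond the margin
loss_bad = par_style_loss([1.5, 1.0], [0.5, 0.2], M)   # paraphrases too far apart
```

In this toy setup the loss is zero when every paraphrase pair is already closer than its negative pair by at least the margin, and it grows as paraphrase distances exceed negative ones.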

The paper: Retrofitting Contextualized Word Embeddings with Paraphrases (Shi et al., EMNLP and IJCNLP 2019)
