This is a brief summary of Enhanced LSTM for Natural Language Inference (Chen et al., ACL 2017), a paper I read and studied. I wrote it to help me organize what I learned.

This paper addresses the Natural Language Inference (NLI) task.

The task is to predict whether the relationship between two sentences, a premise and a hypothesis, is entailment, contradiction, or neutral.

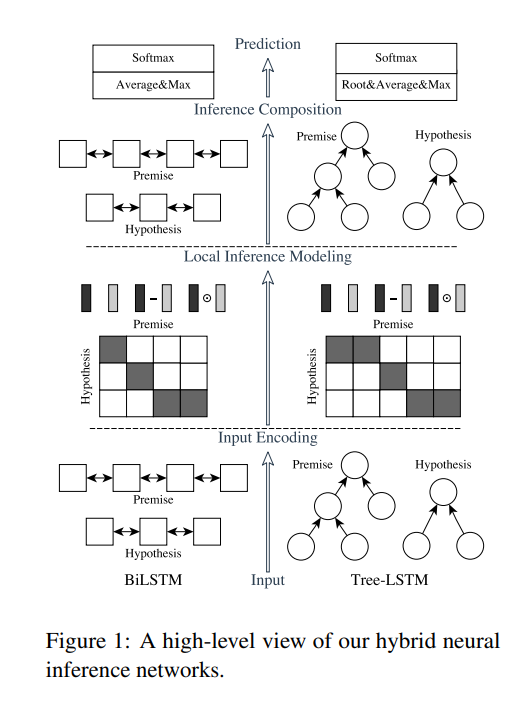

Their model is called ESIM (Enhanced Sequential Inference Model) and is shown on the left side of the figure below.

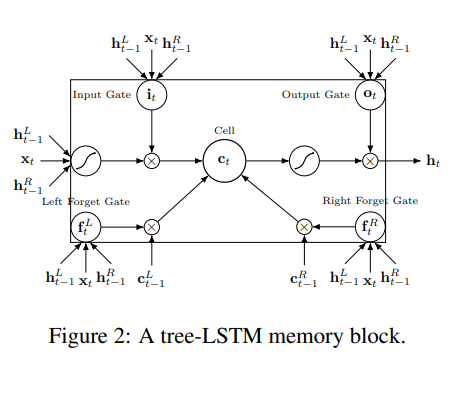

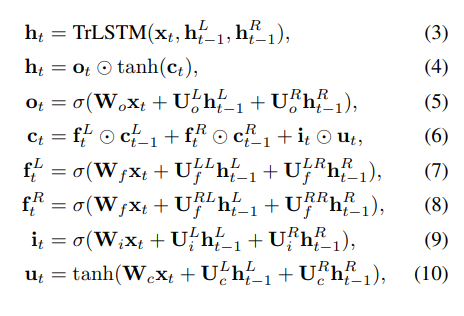

They also build a tree-LSTM variant over syntactic parse trees, and they demonstrate that incorporating this syntactic parsing information with tree-LSTMs contributes to their best result.

They use a bidirectional LSTM (BiLSTM) to encode each word together with its context, and then they extract local inference information with an attention mechanism.

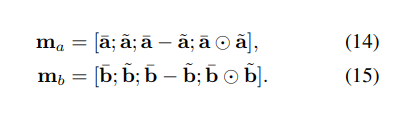
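To make the attention step concrete, here is a minimal pure-Python sketch of the soft alignment used for local inference. It assumes the BiLSTM outputs $\bar{a}$ (premise) and $\bar{b}$ (hypothesis) are already available as lists of vectors. The attention weight is the dot product $e_{ij} = \bar{a}_i^\top \bar{b}_j$. Each $\tilde{a}_i$ is a softmax-weighted sum of the hypothesis vectors, and each $\tilde{b}_j$ is a softmax-weighted sum of the premise vectors. The function names are hypothetical and do not come from the authors' code.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(x * y for x, y in zip(u, v))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: List[float], vectors: List[Vector]) -> Vector:
    dim = len(vectors[0])
    return [sum(w * vec[k] for w, vec in zip(weights, vectors)) for k in range(dim)]


def soft_align(a_bar: List[Vector], b_bar: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    # Attention matrix: e[i][j] = a_bar_i . b_bar_j
    e = [[dot(ai, bj) for bj in b_bar] for ai in a_bar]
    # a_tilde_i: hypothesis vectors weighted by a softmax over row i (over j)
    a_tilde = [weighted_sum(softmax(e[i]), b_bar) for i in range(len(a_bar))]
    # b_tilde_j: premise vectors weighted by a softmax over column j (over i)
    b_tilde = [
        weighted_sum(softmax([e[i][j] for i in range(len(a_bar))]), a_bar)
        for j in range(len(b_bar))
    ]
    return a_tilde, b_tilde
```

Because the weights in each softmax sum to 1, every aligned vector is a convex combination of the other sentence's BiLSTM outputs.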

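To enhance the local inference information before composition and classification, they compute the difference and the element-wise product for the tuple $\langle \bar{a}, \tilde{a} \rangle$ as well as for $\langle \bar{b}, \tilde{b} \rangle$. Here $\bar{a}$ and $\bar{b}$ are the outputs of the bidirectional LSTMs on the premise and the hypothesis, respectively, and $\tilde{a}$ and $\tilde{b}$ are their attention-aligned counterparts. These are concatenated with the original vectors: $m_a = [\bar{a}; \tilde{a}; \bar{a} - \tilde{a}; \bar{a} \odot \tilde{a}]$, and likewise $m_b = [\bar{b}; \tilde{b}; \bar{b} - \tilde{b}; \bar{b} \odot \tilde{b}]$.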
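A minimal pure-Python sketch of this enhancement step follows. It assumes the BiLSTM outputs and the aligned vectors are given as equal-length lists of vectors, such as the output of a soft-alignment step. The helper name `enhance` is hypothetical.

```python
from typing import List

Vector = List[float]


def enhance(bar: List[Vector], tilde: List[Vector]) -> List[Vector]:
    """Build m_i = [bar_i; tilde_i; bar_i - tilde_i; bar_i * tilde_i] per position."""
    enhanced = []
    for x, y in zip(bar, tilde):
        diff = [xi - yi for xi, yi in zip(x, y)]  # element-wise difference
        prod = [xi * yi for xi, yi in zip(x, y)]  # element-wise product
        enhanced.append(x + y + diff + prod)      # list concatenation = vector concat
    return enhanced


print(enhance([[1.0, 2.0]], [[3.0, 4.0]]))
# → [[1.0, 2.0, 3.0, 4.0, -2.0, -2.0, 3.0, 8.0]]
```

Each enhanced vector is four times as wide as the BiLSTM output. The difference and product terms expose contrast and similarity features directly instead of leaving them for later layers to learn.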

They then use another bidirectional LSTM to compose the enhanced local inference information, and convert the result into a fixed-length vector with average and max pooling:

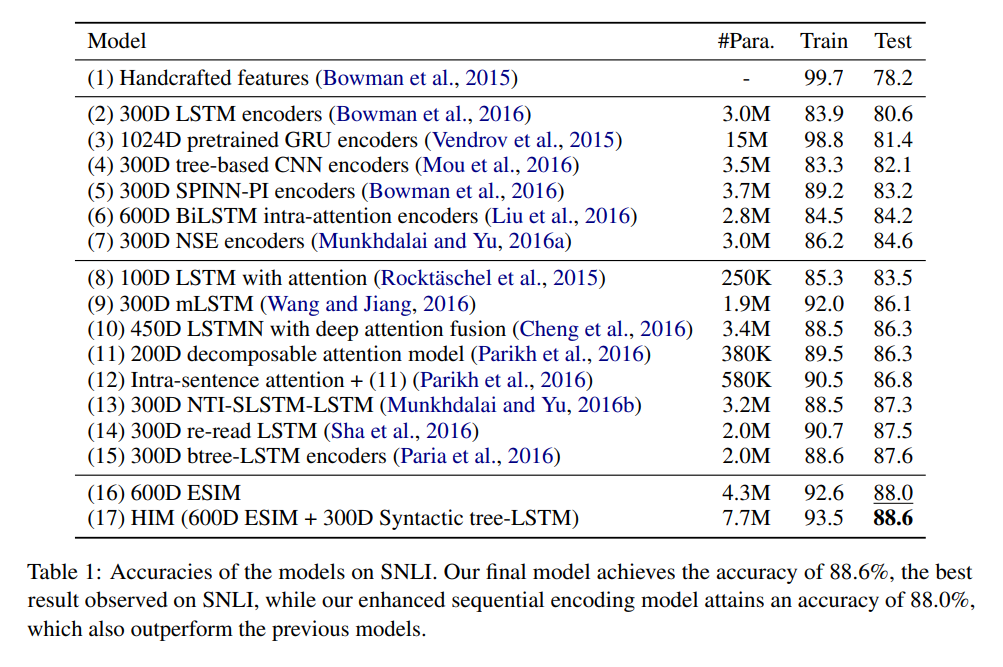
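The pooling step can be sketched in pure Python. This sketch assumes the composition BiLSTM has already produced the sequences $v_a$ and $v_b$, and the BiLSTM itself is omitted. The result is the concatenation $[v_{a,\text{ave}}; v_{a,\max}; v_{b,\text{ave}}; v_{b,\max}]$, which the paper feeds to an MLP classifier. The function names are hypothetical.

```python
from typing import List

Vector = List[float]


def avg_max_pool(seq: List[Vector]) -> Vector:
    # Pool over the time dimension; the output size depends only on the vector width.
    n = len(seq)
    dim = len(seq[0])
    ave = [sum(vec[k] for vec in seq) / n for k in range(dim)]
    mx = [max(vec[k] for vec in seq) for k in range(dim)]
    return ave + mx


def pooled_representation(v_a: List[Vector], v_b: List[Vector]) -> Vector:
    # [v_a_ave; v_a_max; v_b_ave; v_b_max]
    return avg_max_pool(v_a) + avg_max_pool(v_b)


print(pooled_representation([[1.0, 4.0], [3.0, 2.0]], [[5.0, -1.0]]))
# → [2.0, 3.0, 3.0, 4.0, 5.0, -1.0, 5.0, -1.0]
```

Premises and hypotheses of different lengths map to vectors of the same size, which is what makes the final classifier input fixed-length.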

The paper also compares their model with a variety of previous NLI models, including sentence-embedding-based ones, and shows that their model outperforms them: