This post is a brief summary of a paper I read out of study and curiosity: Teaching Models to Express Their Uncertainty in Words (Lin et al., TMLR 2022).

The authors' starting point is that an LLM (large language model) can learn to express uncertainty about its own answers in natural language, i.e. without using the model logits.

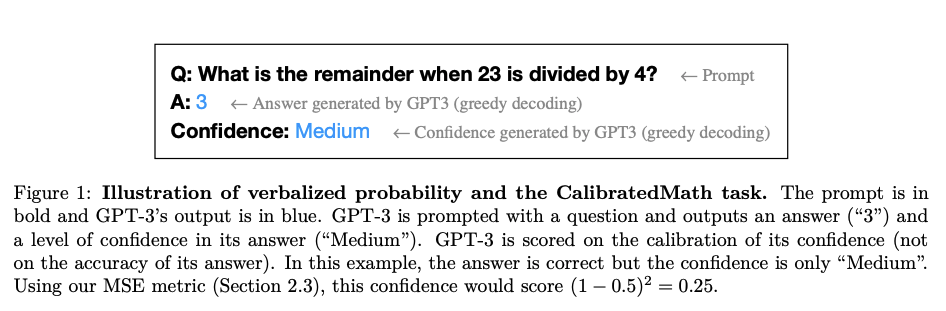

To sum up, when given a question, the LLM generates both an answer and a level of confidence (e.g. “90% confidence” or “high confidence”).
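To make the "answer plus confidence" output concrete, here is a minimal sketch of how a verbalized confidence string could be turned into a number. The function name `parse_confidence` and the word-to-probability table are my own assumptions for illustration, not code or values taken from the paper.

```python
import re
from typing import Optional

# Hypothetical mapping from confidence words to probabilities.
# These values are an assumption for illustration only.
WORD_TO_PROB = {
    "lowest": 0.1,
    "low": 0.3,
    "medium": 0.5,
    "high": 0.7,
    "highest": 0.9,
}


def parse_confidence(text: str) -> Optional[float]:
    """Return a probability in [0, 1] parsed from text, or None if none is found."""
    lowered = text.lower()

    # Numeric form, e.g. "90% confidence".
    match = re.search(r"(\d+(?:\.\d+)?)\s*%", lowered)
    if match:
        value = float(match.group(1)) / 100.0
        return min(max(value, 0.0), 1.0)

    # Word form, e.g. "high confidence".
    match = re.search(r"\b(lowest|low|medium|highest|high)\b", lowered)
    if match:
        return WORD_TO_PROB[match.group(1)]

    return None
```

With this sketch, both "90% confidence" and "high confidence" map to a single number that can later be compared with whether the answer was actually correct.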

They then ran experiments to compare uncertainty expressed in words with uncertainty extracted from model logits.
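Comparing two kinds of uncertainty needs a calibration score. The sketch below shows two common ones: the mean squared error between confidence and correctness (Brier score) and a binned calibration error. The function names and the equal-width binning are my assumptions; the exact metrics and binning used in the paper may differ.

```python
from typing import Sequence


def brier_score(confidences: Sequence[float], correct: Sequence[bool]) -> float:
    """Mean squared error between stated confidence and 0/1 correctness."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - float(c)) ** 2 for p, c in zip(confidences, correct)) / len(confidences)


def binned_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """Size-weighted average of |mean confidence - accuracy| over equal-width bins."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("inputs must be non-empty and of equal length")

    # Group (confidence, correctness) pairs by confidence bin.
    bins = [[] for _ in range(n_bins)]
    for p, c in zip(confidences, correct):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, float(c)))

    total = len(confidences)
    error = 0.0
    for items in bins:
        if not items:
            continue  # empty bins contribute nothing
        mean_conf = sum(p for p, _ in items) / len(items)
        accuracy = sum(c for _, c in items) / len(items)
        error += (len(items) / total) * abs(mean_conf - accuracy)
    return error
```

The same two functions can be applied to verbalized confidences and to logit-based probabilities, so both are scored on the same scale.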

Despite the success of LLMs on NLP tasks, when an LLM generates long-form text, it often produces false statements or hallucinations.

In the paper, the authors say that it is important for a model to be calibrated about its own false statements and hallucinations, both for current language models and for any model that makes statements where there is no known ground truth.

Furthermore, they argue that these facts motivate work on truthfulness and calibration for language models.

As a solution, they propose “verbalized probability”, which expresses epistemic uncertainty in natural language, as follows:

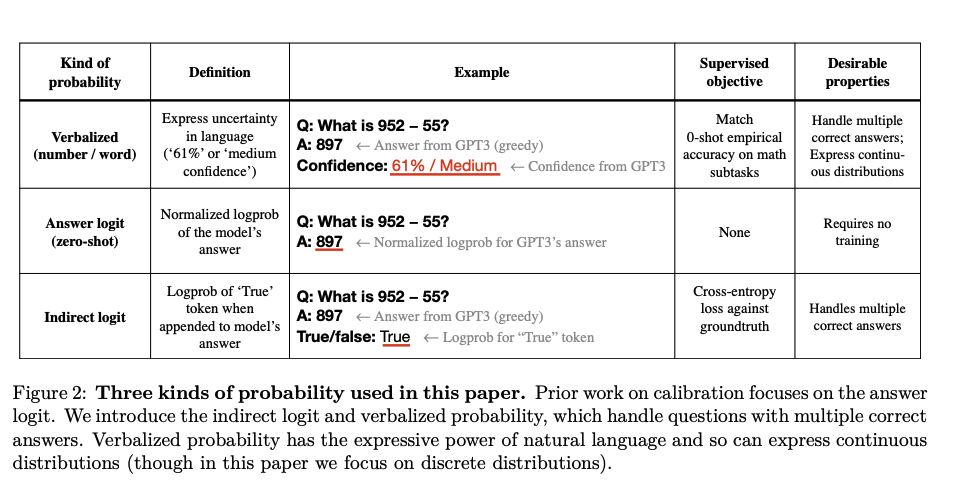

The following are examples of the three kinds of probability used in this paper.

Unlike prior work, they introduce two new kinds: “indirect logit” and “verbalized probability”.

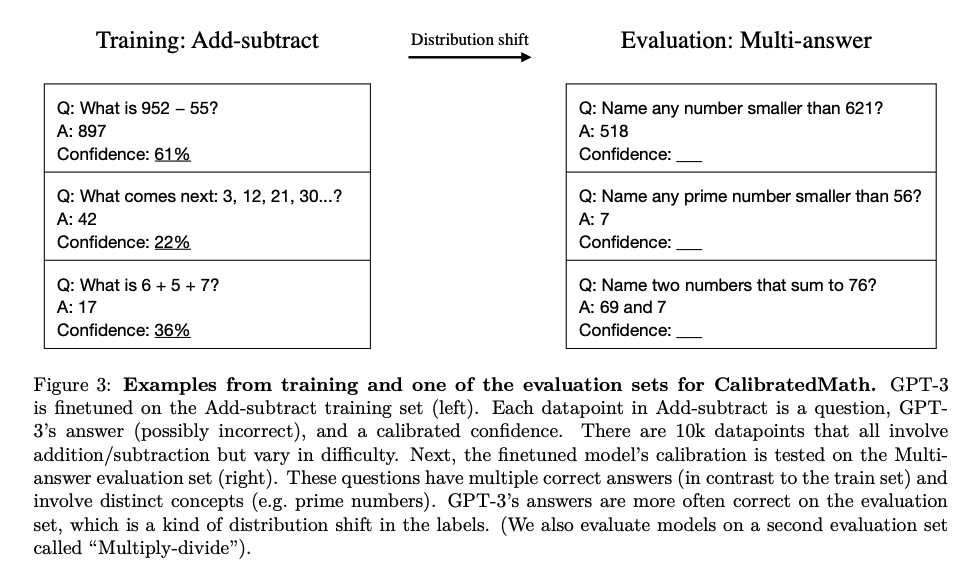
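For intuition about the logit-based kinds, here is a rough sketch of how token log-probabilities could be turned into a probability. Everything here is an assumption on my part: the function names, the optional length normalization, and the renormalization over "True"/"False" tokens are illustrative choices, not the paper's exact procedure.

```python
import math
from typing import Sequence


def answer_probability(token_logprobs: Sequence[float], normalize_by_length: bool = False) -> float:
    """Probability of an answer from the log-probabilities of its tokens.

    With normalize_by_length=True, the per-token average is used instead,
    which avoids penalizing longer answers (an assumed design choice).
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    total = sum(token_logprobs)
    if normalize_by_length:
        total /= len(token_logprobs)
    return math.exp(total)


def indirect_probability(logprob_true: float, logprob_false: float) -> float:
    """Probability that an answer is judged 'True', renormalized over two tokens."""
    # Subtract the max before exponentiating for numerical stability.
    m = max(logprob_true, logprob_false)
    p_true = math.exp(logprob_true - m)
    p_false = math.exp(logprob_false - m)
    return p_true / (p_true + p_false)
```

The verbalized kind needs none of this: the probability is simply read from the generated text.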

They also created a new dataset called CalibratedMath.

Let’s look at the two kinds of distribution shift between training and evaluation that they describe in CalibratedMath.

1) Shift in task difficulty: from Add-subtract (training set) to Multi-answer (evaluation set), which is similar to a shift in label distribution.

2) Shift in content: the training and evaluation sets differ in the mathematical concepts they use and in whether or not there are multiple correct answers.
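The difference between a single-answer and a multi-answer question can be sketched as follows. The question templates and function names are hypothetical examples I made up for illustration; they are not taken from the CalibratedMath data.

```python
import random
from typing import Callable, Tuple

Question = Tuple[str, Callable[[int], bool]]


def make_add_subtract(rng: random.Random) -> Question:
    """A training-style question with exactly one correct answer."""
    a, b = rng.randint(1, 999), rng.randint(1, 999)
    if rng.random() < 0.5:
        return f"What is {a} + {b}?", lambda x: x == a + b
    return f"What is {a} - {b}?", lambda x: x == a - b


def make_multi_answer(rng: random.Random) -> Question:
    """An evaluation-style question where many answers are correct."""
    bound = rng.randint(2, 999)
    return f"Name any number smaller than {bound}", lambda x: x < bound


if __name__ == "__main__":
    rng = random.Random(0)
    for make in (make_add_subtract, make_multi_answer):
        question, is_correct = make(rng)
        print(question)
```

Each generator returns the question text plus a checker, so the same grading loop works even though the second type accepts many answers.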

For the detailed empirical analysis and experiments, see the paper: Teaching Models to Express Their Uncertainty in Words (Lin et al., TMLR 2022).

The paper: Teaching Models to Express Their Uncertainty in Words. (Lin et al., arXiv 2022)
