This post is a brief summary of a paper I read out of curiosity and for my own study: Contrastive Decoding: Open-ended Text Generation as Optimization (Li et al., ACL 2023).

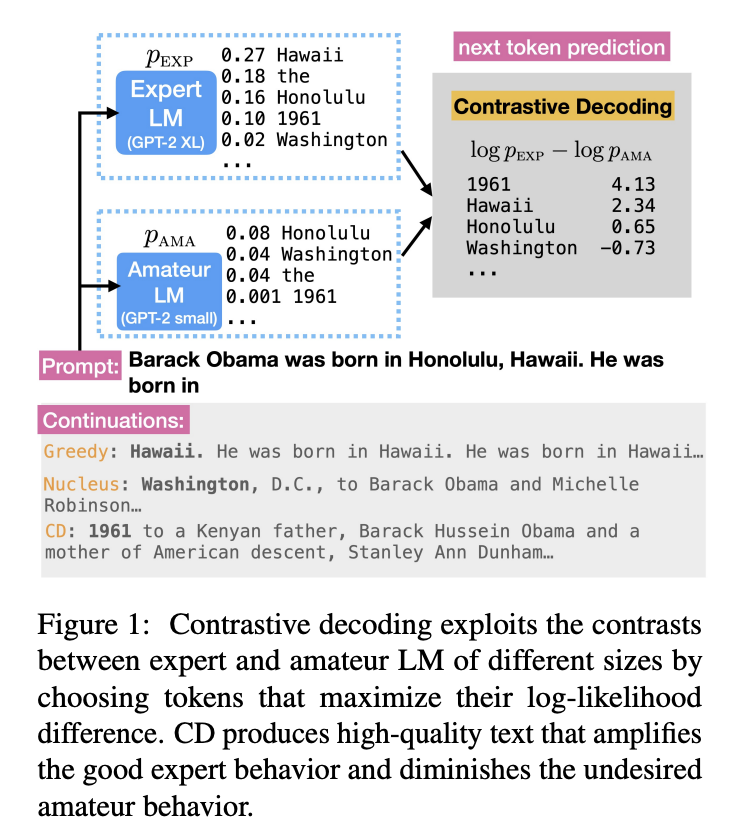

The paper proposes a search-based decoding strategy called Contrastive Decoding (CD), which contrasts a large expert LM with a small amateur LM.

Specifically, the paper says that the CD objective rewards text patterns favored by the large expert LM and penalizes patterns favored by the small amateur LM.
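Written as a formula, the objective described above is the difference between the two models' log-likelihoods of a continuation given the prompt. The symbols here (`x_pre` for the prompt, `x_cont` for the continuation, `EXP` and `AMA` for the expert and amateur) follow my reading of the paper, so check them against the original:

$$
\mathcal{L}_{\text{CD}}(x_{\text{cont}}, x_{\text{pre}}) = \log p_{\text{EXP}}(x_{\text{cont}} \mid x_{\text{pre}}) - \log p_{\text{AMA}}(x_{\text{cont}} \mid x_{\text{pre}})
$$

A continuation scores high when the expert finds it much more likely than the amateur does.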

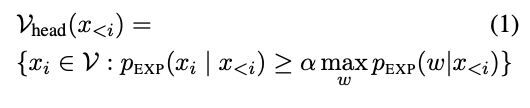

However, amateur LMs are not always mistaken: small language models still capture many simple aspects of English grammar and common sense (e.g., subject-verb agreement). Thus, penalizing all amateur behavior indiscriminately would penalize these simple aspects even though they are correct (false negatives), and conversely would reward implausible tokens that the amateur happens to find even less likely than the expert does (false positives).
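To make both failure modes concrete, here is a small sketch with made-up numbers. The four-word vocabulary and both probability vectors are hypothetical, chosen only so that the unconstrained score misbehaves; they do not come from the paper.

```python
import numpy as np

# Hypothetical next-token distributions over a toy vocabulary.
# "zxq" stands in for an implausible token both models consider very unlikely.
vocab = ["is", "are", "was", "zxq"]
p_expert = np.array([0.90, 0.05, 0.0499, 0.0001])
p_amateur = np.array([0.95, 0.03, 0.0199999, 0.0000001])

# Unconstrained contrastive score: log p_EXP - log p_AMA for every token.
scores = np.log(p_expert) - np.log(p_amateur)

# Token that a purely contrastive decoder would pick.
best = vocab[int(np.argmax(scores))]

for token, score in zip(vocab, scores):
    print(f"{token:>4}: {score:+.3f}")
print("picked:", best)
```

In this toy setup the obviously correct token "is" gets a slightly negative score because the amateur is also confident about it (a false negative), while "zxq" gets the highest score simply because the amateur assigns it 1000 times less probability than the expert (a false positive).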

To tackle this issue, the authors propose an adaptive plausibility constraint as follows:

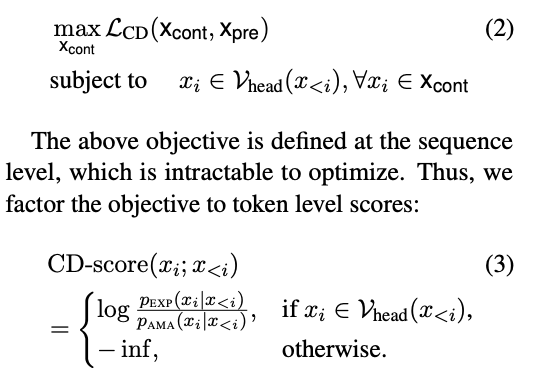
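As I understand the constraint, it keeps only the tokens whose expert probability is at least a fraction α of the expert's most likely token, and applies the contrastive score only to those. Below is a minimal token-level sketch under that assumption. The function name `cd_scores`, the toy distributions, and `alpha=0.1` are my own illustrative choices, not the authors' implementation.

```python
import numpy as np

def cd_scores(p_expert, p_amateur, alpha=0.1):
    """Contrastive scores restricted to the expert's plausible tokens."""
    p_expert = np.asarray(p_expert, dtype=float)
    p_amateur = np.asarray(p_amateur, dtype=float)
    # Plausible set: expert probability >= alpha * (max expert probability).
    plausible = p_expert >= alpha * p_expert.max()
    # Tokens outside the plausible set are ruled out with a score of -inf.
    scores = np.full(p_expert.shape, -np.inf)
    scores[plausible] = np.log(p_expert[plausible]) - np.log(p_amateur[plausible])
    return scores

# Hypothetical toy distributions (not from the paper).
vocab = ["is", "are", "was", "zxq"]
p_expert = [0.90, 0.05, 0.0499, 0.0001]
p_amateur = [0.95, 0.03, 0.0199999, 0.0000001]

scores = cd_scores(p_expert, p_amateur, alpha=0.1)
choice = vocab[int(np.argmax(scores))]
print("picked:", choice)
```

Because the expert is very confident here, the threshold is 0.09 and only "is" survives, so the implausible "zxq" can no longer win even though its raw contrastive score is the largest. The constraint is adaptive in the sense that a flatter expert distribution would let more tokens through.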

So, the full formula combines the contrastive objective with the adaptive plausibility constraint.
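For reference, here is how I would write the combined token-level score. The notation is my reconstruction from the paper: V is the vocabulary, α is the truncation hyperparameter, and x_{<i} is the context before position i. Please verify it against the original equations.

$$
\mathcal{V}_{\text{head}}(x_{<i}) = \left\{ x_i \in \mathcal{V} : p_{\text{EXP}}(x_i \mid x_{<i}) \ge \alpha \max_{w} p_{\text{EXP}}(w \mid x_{<i}) \right\}
$$

$$
\text{CD-score}(x_i; x_{<i}) =
\begin{cases}
\log \dfrac{p_{\text{EXP}}(x_i \mid x_{<i})}{p_{\text{AMA}}(x_i \mid x_{<i})}, & \text{if } x_i \in \mathcal{V}_{\text{head}}(x_{<i}), \\
-\infty, & \text{otherwise.}
\end{cases}
$$

Tokens outside the plausible set get a score of negative infinity, so the search never selects them regardless of how unlikely the amateur finds them.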

For the detailed empirical analysis and experiments, see the paper: Contrastive Decoding: Open-ended Text Generation as Optimization (Li et al., ACL 2023).


Reference

- Paper: Contrastive Decoding: Open-ended Text Generation as Optimization (Li et al., ACL 2023)

- How to use HTML for alert

- How to use MathJax