This is a brief summary of the paper Deep Semantic Role Labeling: What Works and What’s Next (He et al., ACL 2017), which I read and studied. I wrote it to organize what I learned.

Semantic role labeling (SRL) systems aim to recover the predicate-argument structure of a sentence, to determine essentially “who did what to whom”, “when”, and “where.”

Recent breakthroughs involving end-to-end deep models for SRL without syntactic input seem to overturn the long-held belief that syntactic parsing is a prerequisite for this task.

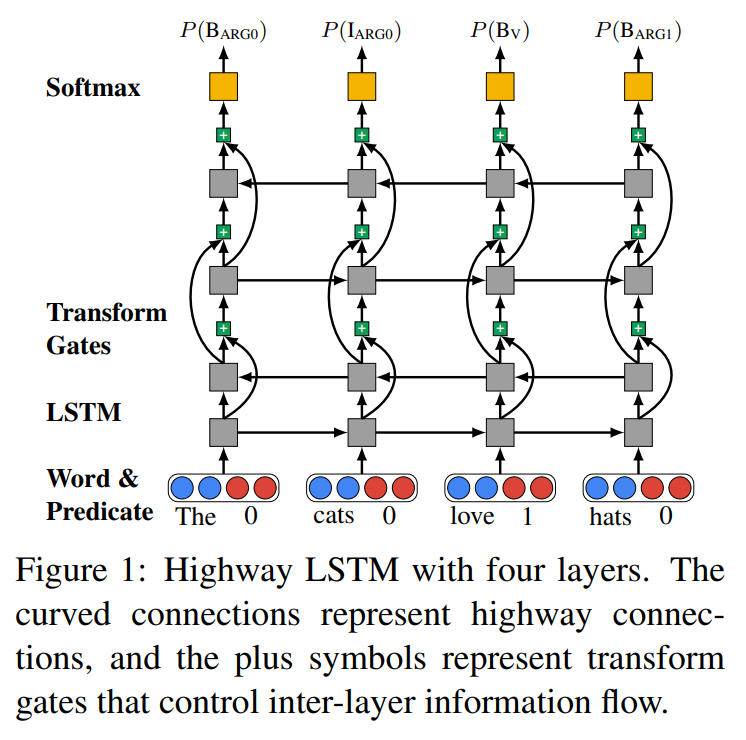

Treating SRL as a BIO tagging problem, they propose an end-to-end deep neural model built on bidirectional LSTMs.

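As shown in the figure above, the bidirectional LSTM layers are interleaved: each layer reads the output of the layer below it, and the processing direction alternates from one layer to the next (left to right, then right to left, and so on).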
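To make the alternating directions concrete, here is a minimal sketch in Python. It is not the paper's code: a toy running-sum cell (the hypothetical `running_sum_cell`) stands in for an LSTM cell so that the effect of direction is easy to see, and the helper names `run_layer` and `interleaved_stack` are made up for illustration. Each layer reads the previous layer's outputs, and odd-numbered layers process the sequence right to left.

```python
from typing import Callable, List

# A recurrent "cell" maps (input at step t, previous state) -> new state.
Cell = Callable[[float, float], float]


def running_sum_cell(x: float, h: float) -> float:
    """Toy stand-in for an LSTM cell (hypothetical): the state is a running sum."""
    return x + h


def run_layer(xs: List[float], cell: Cell, reverse: bool) -> List[float]:
    """Run one recurrent layer over xs, left to right or right to left.

    Outputs are aligned with the original token positions,
    so outputs[i] always corresponds to token i.
    """
    order = range(len(xs) - 1, -1, -1) if reverse else range(len(xs))
    outputs = [0.0] * len(xs)
    h = 0.0  # initial state
    for i in order:
        h = cell(xs[i], h)
        outputs[i] = h
    return outputs


def interleaved_stack(xs: List[float], cells: List[Cell]) -> List[float]:
    """Stack layers whose direction alternates: forward, backward, forward, ..."""
    hidden = xs
    for depth, cell in enumerate(cells):
        # Layer k reads the outputs of layer k-1; odd layers run right to left.
        hidden = run_layer(hidden, cell, reverse=(depth % 2 == 1))
    return hidden


print(run_layer([1.0, 2.0, 3.0], running_sum_cell, reverse=False))  # [1.0, 3.0, 6.0]
print(run_layer([1.0, 2.0, 3.0], running_sum_cell, reverse=True))   # [6.0, 5.0, 3.0]
print(interleaved_stack([1.0, 2.0, 3.0], [running_sum_cell] * 2))   # [10.0, 9.0, 6.0]
```

After two layers every position depends on the whole sentence: the first layer carries left context rightward, and the second carries that result back leftward. In the real model each cell is an LSTM over vectors rather than a scalar sum.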

Formally, their task is to predict a sequence \(y\) given a sentence-predicate pair \((w, v)\) as input. Each \(y_i \in y\) belongs to a discrete set of BIO tags \(T\).

Words outside argument spans have the tag \(O\), and words at the beginning and inside of argument spans with role \(r\) have the tags \(B_r\) and \(I_r\) respectively.

Let \(n = |w| = |y|\) be the length of the sequence.
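As a small illustration of this encoding, the sketch below turns labeled argument spans into a BIO tag sequence \(y\) of length \(n\), writing \(B_r\) and \(I_r\) as the strings `B-r` and `I-r`. The function names, the span format `(start, end_inclusive, role)`, and the example sentence are assumptions for illustration. Marking the predicate itself with a `V` span follows the common CoNLL-style convention and is also an assumption here.

```python
from typing import List, Tuple

# An argument span: (start index, end index inclusive, role label).
Span = Tuple[int, int, str]


def spans_to_bio(n: int, spans: List[Span]) -> List[str]:
    """Encode labeled argument spans as a length-n BIO tag sequence y."""
    tags = ["O"] * n  # words outside every argument span get O
    for start, end, role in spans:
        if not (0 <= start <= end < n):
            raise ValueError(f"span {(start, end)} out of range for n={n}")
        if any(tags[i] != "O" for i in range(start, end + 1)):
            raise ValueError(f"span {(start, end)} overlaps another span")
        tags[start] = f"B-{role}"  # first word of the span: B_r
        for i in range(start + 1, end + 1):
            tags[i] = f"I-{role}"  # remaining words of the span: I_r
    return tags


def tag_set(roles: List[str]) -> List[str]:
    """Build T = {O} plus B_r and I_r for each role r, so |T| = 2 * len(roles) + 1."""
    return ["O"] + [f"{p}-{r}" for r in roles for p in ("B", "I")]


words = ["The", "cat", "ate", "the", "fish"]
spans = [(0, 1, "ARG0"), (2, 2, "V"), (3, 4, "ARG1")]
print(spans_to_bio(len(words), spans))
# ['B-ARG0', 'I-ARG0', 'B-V', 'B-ARG1', 'I-ARG1']
```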

They also used a variety of deep learning techniques:

- highway connections between LSTM layers

- recurrent dropout (RNN dropout)

- A* decoding

- decoding constraints: BIO constraints, SRL constraints, and syntactic constraints
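The BIO constraints are the easiest of these to illustrate: a tag \(I_r\) is only valid if the previous tag is \(B_r\) or \(I_r\), so it can never start a sentence. The paper enforces its constraints with A* search; the sketch below instead swaps in Viterbi decoding, which, assuming a sequence's score is the sum of its per-token log-probabilities, finds the same highest-scoring valid sequence (up to ties) for these pairwise BIO constraints. It does not handle the SRL or syntactic constraints, which are not pairwise. The function names and toy scores are hypothetical.

```python
import math
from typing import Dict, List, Optional


def bio_allowed(prev: Optional[str], cur: str) -> bool:
    """BIO constraint: I-r may only follow B-r or I-r (prev=None means sentence start)."""
    if not cur.startswith("I-"):
        return True
    role = cur[2:]
    return prev in (f"B-{role}", f"I-{role}")


def constrained_viterbi(scores: List[Dict[str, float]]) -> List[str]:
    """Return the highest-scoring tag sequence that satisfies the BIO constraints.

    scores[i][tag] is the log-probability of `tag` at position i; a sequence's
    score is the sum of its per-token log-probabilities.
    """
    tags = list(scores[0])
    # best[i][t]: best total score of a valid prefix ending with tag t at position i.
    best = [{t: (scores[0][t] if bio_allowed(None, t) else -math.inf) for t in tags}]
    back: List[Dict[str, str]] = []
    for i in range(1, len(scores)):
        row: Dict[str, float] = {}
        ptr: Dict[str, str] = {}
        for cur in tags:
            # Only predecessors that form a valid BIO transition are considered.
            cands = [(best[-1][p], p) for p in tags if bio_allowed(p, cur)]
            prev_score, prev_tag = max(cands)
            row[cur] = prev_score + scores[i][cur]
            ptr[cur] = prev_tag
        best.append(row)
        back.append(ptr)
    # Follow back-pointers from the best final tag.
    last = max(tags, key=lambda t: best[-1][t])
    path = [last]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    return path[::-1]


# Toy per-token probabilities (hypothetical), converted to log-probabilities.
probs = [
    {"O": 0.3, "B-ARG0": 0.2, "I-ARG0": 0.5},
    {"O": 0.2, "B-ARG0": 0.1, "I-ARG0": 0.7},
    {"O": 0.8, "B-ARG0": 0.1, "I-ARG0": 0.1},
]
scores = [{t: math.log(p) for t, p in row.items()} for row in probs]
print(constrained_viterbi(scores))  # ['B-ARG0', 'I-ARG0', 'O']
```

A greedy per-token argmax on the same scores would give `I-ARG0 I-ARG0 O`, which starts with an I tag and is therefore invalid; the constrained decoder gives up a little per-token probability to return a well-formed sequence.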

For the detailed experimental analysis and an in-depth description of the model architecture and constraints, see Deep Semantic Role Labeling: What Works and What’s Next (He et al., ACL 2017).

The paper: Deep Semantic Role Labeling: What Works and What’s Next (He et al., ACL 2017)

Reference

- Paper

- How to use html for alert