This is a brief summary of paper for me to study and arrange for Normalized Word Embedding and Orthogonal Transform for Bilingual Word Translation (Xing et al., NAACL 2015) I read and studied.

This paper propose handling the inconsistence among the objective function used to learn word vectors (maximum likelihood based on inner product), the distance measurement for word vectors (cosine distance), and the objective function used to learn the linear transform(mean square error). Conjecturing this inconsistence may lead to suboptimal estimation for both word vectors and the bilingual transform.

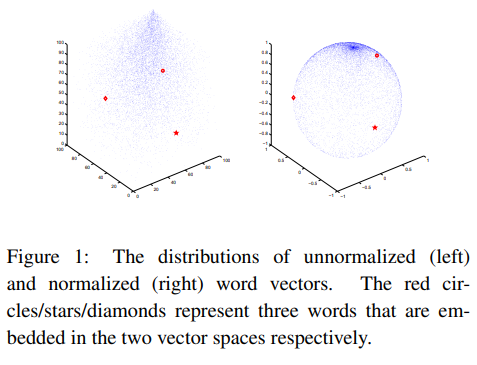

So, they solved the inconsistence by normalizing the word vectors. Specifically, the word vectors are enforced to be in a unit length during the learning of the embedding. By this constraint, all the word vectors are located on a hypersphere and so the inner product falls back to the cosine distance:

This hence solves the inconsistence between the embedding and the distance measurement. To reflect the normalization constraint on word vectors, the linear transform in the bilingual projection has to be constrained as an orthogonal transform. Finally, the cosine distance is used when we train the orthogonal transform, in order to achieve full consistence

They prove their method’s performance on word similarity task and bilingual word traslation which can be categorized into projection-based approaches and regularizationbased approaches.

The paper: Normalized Word Embedding and Orthogonal Transform for Bilingual Word Translation (Xing et al., 2015 NAACL)