This is a brief summary of paper for me to study and organize it, Japanese and Korean Voice Search (Schuster and Nakajima., ICASSP 2012) I read and studied.

I was interested in WordPiece model. The reason is When I read the paper published by Google they used WordPiece model.

For example, when I read BERT and translation papers, They use input tokens with WordPiece model.

So I found the paper about WordPiece model from voice search reseach.

In this paper, when they tokenized word to word unit, they doesn’t consider the semantic information.

So they wanted to make tokenizer not dependent on language and solving the out-of-vocabulary problem.

The model, called WordPieceModel learns word units form large amounts of text automatically and incremetally by running a greedy algorithm as follows:

They said :

- Initialize the word unit inventory with the basic Unicode characters (Kanji, Hiragana, Katakana for Japanese, Hangul for Korean) and including all ASCII, ending up with about 22,000 for Japanese total and 11,000 for Korean.

- Build a language model on the training data using the inventory from 1.

- Generate a new word unit by combinning two units out of the current word inventory to increment the word unit inventory by one. Choose the new word unit out of all possible ones that increase the likelihood on the training data the most when added to the model.

- Goto 2 until a predefined limit of word units is reached or the likelihood increase falls below a certain threshold.

Based on the WordPieceModel, its algorithm give deterministic segmentations.

In order to be able to learn where where to put spaces from the data and make it part of the decoding strategy they used the following:

- The orignal language dmoel data is used as written, meaning some datat with, some without spaces.

- when segmenting the LM data with WordPieceModel attach a space marker (they used an underscore or a tilde) before and after each unit when you see a space, otherwise don’t attach. Each word unit can hence appear in four different forms, with underscore on both sides (there was a space originally on both sides in the data), with a space marker only on the left or only the right, and without space markers at all. The word inventory si now larger, possibly up to four times the original size if every combination exists.

- Biuld LM and dictionary based on this new inventory.

- During decodding the best path according to the model will be chosen, which preserves where to put spaces and where not. The attached space markers obviously have to be filtered out from the decoding output for correct display Assuming. The common scenario where the decoder puts automatically a space between each unit in the output string this procedure the is :

(a) Remove all spaces (“ “ -> “”)

(b) Remove double space marker by space (“__” -> “ “)

(c) Remove remaining single space markers (“_” -> “”)

This last step could potentially be also a replacement by space as this is the rare case when the decoder hypothesize a word unit with space followed by one without space or vice versa.

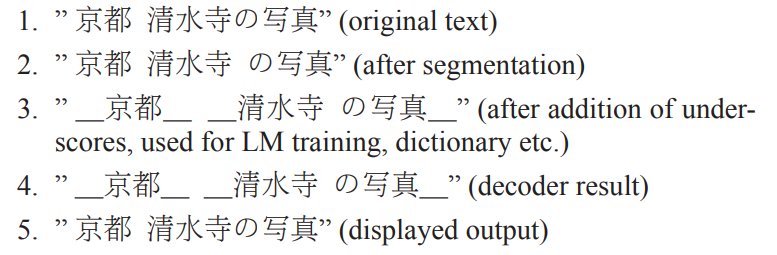

Let’s see an exmaple explained in their paper:

They showed an example of the segmentation and gluing proceduer-note that the original and final texts contain where there is a space.

(1) Janpanese original unsegmented text

(2) After segementation

(3) the addition of underscores to retain location of spaces

(4) a possible raw decoder result

(5) with the final result after removing underscores

Also, they said:

this techniques are successfully used for some Asian languages (Japanese, Korean, Mandarin) across multiple applications, Voice Search, dictation as well as YouTube caption generation.

And Google release the extension of WordPieceModel, it is called SentencePiece.

If you want to know about SentencePiece in detail, visit here.

If you can also use the version of python, type in as follows:

For installation of pythone module,

SentencePiece provides Python wrapper that supports both SentencePiece training and segmentation.

You can install Python binary package of SentencePiece with.

% pip install sentencepiece

Reference

- Paper

- How to use html for alert

- For your information

-

Korean ver.

Below is youtube about Korean tokenizing for your information but the person of youtube are speaking in Korean.