This is a brief summary of a paper I read and studied, Convolutional Neural Networks for Sentence Classification (Kim, EMNLP 2014), written to help me study and organize it.

They propose a CNN architecture built on top of two sets of pre-trained word2vec vectors, one static and one dynamic (fine-tuned).

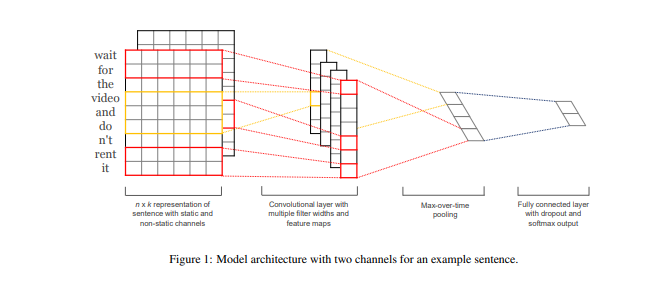

Their architecture is as follows:

Of the two sets of pre-trained word vectors, one is kept static throughout training and the other is fine-tuned via backpropagation.
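To make the two-channel idea concrete for myself, here is a minimal NumPy sketch, not the author's implementation. It assumes toy sizes, random vectors standing in for word2vec, a single filter with separate weights per channel (as in a standard two-channel convolution), a ReLU non-linearity, and a placeholder gradient instead of a real backward pass. The names `feature_map` and `sgd_update` are my own.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes and random stand-ins for pre-trained word2vec vectors (assumptions).
vocab_size, embed_dim, window = 10, 4, 3
pretrained = rng.normal(size=(vocab_size, embed_dim))

# Both channels start from the same pre-trained vectors.
static_emb = pretrained.copy()     # kept fixed throughout training
nonstatic_emb = pretrained.copy()  # fine-tuned during training


def feature_map(token_ids, static_emb, nonstatic_emb, w_static, w_nonstatic, bias):
    """Slide one filter over both channels and add the two responses."""
    xs = static_emb[token_ids]      # shape: (sentence_len, embed_dim)
    xn = nonstatic_emb[token_ids]
    h = w_static.shape[0]           # window size in words
    out = []
    for i in range(len(token_ids) - h + 1):
        z = (np.sum(w_static * xs[i:i + h])
             + np.sum(w_nonstatic * xn[i:i + h])
             + bias)
        out.append(max(z, 0.0))     # ReLU
    return np.array(out)


def sgd_update(static_emb, nonstatic_emb, grad_nonstatic, lr=0.1):
    """Only the non-static table is updated; the static one is returned as-is."""
    return static_emb, nonstatic_emb - lr * grad_nonstatic


token_ids = np.array([1, 3, 5, 7, 2])            # a hypothetical 5-word sentence
w_s = rng.normal(size=(window, embed_dim))
w_n = rng.normal(size=(window, embed_dim))

c = feature_map(token_ids, static_emb, nonstatic_emb, w_s, w_n, bias=0.0)
pooled = c.max()                                 # max-over-time pooling

# Placeholder gradient: a real model would obtain this from backpropagation.
fake_grad = rng.normal(size=pretrained.shape)
static_emb, nonstatic_emb = sgd_update(static_emb, nonstatic_emb, fake_grad)
```

After the update, `static_emb` still equals the pre-trained matrix while `nonstatic_emb` has moved away from it, which is the only difference between the two channels.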

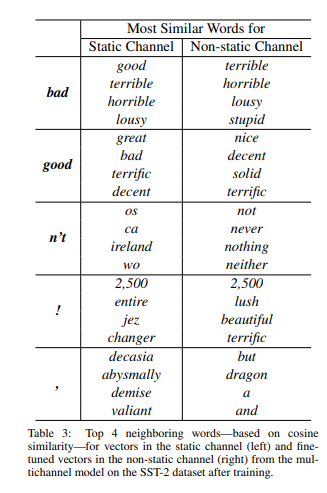

Examples of the top 4 neighboring words, based on cosine similarity, for vectors in the static channel (left) and fine-tuned vectors in the non-static channel (right) of the multichannel model.
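A neighbor lookup of this kind can be sketched as follows. This is only an illustration: the vocabulary and the 2-dimensional vectors are invented for the example and are not taken from the paper, and `top_k_neighbors` is a hypothetical helper name.

```python
import numpy as np


def top_k_neighbors(query, vocab, vectors, k=4):
    """Return the k words closest to `query` by cosine similarity."""
    # Normalize rows so that a dot product equals cosine similarity.
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sims = unit @ unit[vocab.index(query)]
    order = np.argsort(-sims)                    # most similar first
    ranked = [(vocab[i], float(sims[i])) for i in order if vocab[i] != query]
    return ranked[:k]


# Invented toy embeddings (assumption), one row per word.
vocab = ["good", "great", "nice", "bad", "terrible"]
vectors = np.array([
    [1.0, 0.1],
    [0.9, 0.2],
    [0.8, 0.3],
    [-1.0, 0.2],
    [-0.9, 0.1],
])

neighbors = top_k_neighbors("good", vocab, vectors, k=2)
```

Running the same lookup once against the static table and once against the fine-tuned table would give the left and right columns of such a comparison.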

Note(Abstract):

They report on a series of experiments with convolutional neural networks (CNN) trained on top of pre-trained word vectors for sentence-level classification tasks. They show that a simple CNN with little hyperparameter tuning and static vectors achieves excellent results on multiple benchmarks. Learning task-specific vectors through fine-tuning offers further gains in performance. They additionally propose a simple modification to the architecture to allow for the use of both task-specific and static vectors.

Download URL:

The paper: Convolutional Neural Networks for Sentence Classification (Kim, EMNLP 2014)
