This is a brief summary of the paper Modeling Rich Contexts for Sentiment Classification with LSTM (Huang et al., arXiv 2016), which I wrote to study and organize what I read.

They propose a way to build representations with rich context for tweets that form a conversation-like thread.

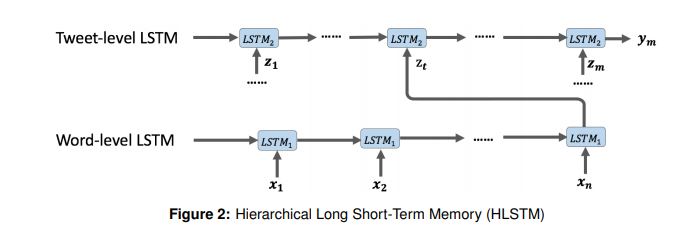

They use two types of LSTM, a word-level LSTM and a tweet-level LSTM, as shown in the figure above.

The word-level LSTM encodes a tweet into a fixed-size vector.

The tweet-level LSTM takes the vectors from the word-level LSTM as input.

In other words,

From Figure 2 we can see that in the word-level LSTM, the input is individual words.

The hidden state of the last word is taken as the representation of the tweet.

Each tweet in a thread goes through the word-level LSTM, and the t-th tweet in the thread produces a tweet representation z_t, which is an input to the tweet-level LSTM.
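The two-level setup described above can be sketched in plain Python. This is only a minimal illustration, not the authors' implementation: the `LSTMCell` class, the `encode_thread` helper, the dimensions, and the random untrained weights are all assumptions made for the example, and context features are left out.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """A plain LSTM cell with random, untrained weights (illustrative only)."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        cols = input_size + hidden_size
        # One weight matrix and bias per gate: input, forget, output, candidate.
        self.W = {g: [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                      for _ in range(hidden_size)] for g in "ifoc"}
        self.b = {g: [0.0] * hidden_size for g in "ifoc"}

    def _affine(self, gate, xh):
        return [sum(w * v for w, v in zip(row, xh)) + b
                for row, b in zip(self.W[gate], self.b[gate])]

    def step(self, x, h, c):
        xh = list(x) + list(h)  # concatenate input and previous hidden state
        i = [sigmoid(v) for v in self._affine("i", xh)]
        f = [sigmoid(v) for v in self._affine("f", xh)]
        o = [sigmoid(v) for v in self._affine("o", xh)]
        g = [math.tanh(v) for v in self._affine("c", xh)]
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, inputs):
        """Return the hidden state after every input step."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        states = []
        for x in inputs:
            h, c = self.step(x, h, c)
            states.append(h)
        return states


def encode_thread(thread, word_lstm, tweet_lstm):
    """thread: list of tweets; each tweet is a non-empty list of word vectors."""
    # Word level: the hidden state of the last word is the tweet representation z_t.
    z = [word_lstm.run(tweet)[-1] for tweet in thread]
    # Tweet level: z_1..z_T are the inputs; one hidden state per tweet comes out.
    return z, tweet_lstm.run(z)


rng = random.Random(0)
word_dim, tweet_dim, thread_dim = 4, 5, 3  # arbitrary sizes for the demo
word_lstm = LSTMCell(word_dim, tweet_dim, rng)
tweet_lstm = LSTMCell(tweet_dim, thread_dim, rng)

# A toy thread of 3 tweets with 2, 4 and 1 words (random stand-ins for embeddings).
thread = [[[rng.uniform(-1, 1) for _ in range(word_dim)] for _ in range(n)]
          for n in (2, 4, 1)]
z, states = encode_thread(thread, word_lstm, tweet_lstm)
print(len(z), len(z[0]), len(states), len(states[0]))  # prints: 3 5 3 3
```

Each tweet, whatever its length, ends up as one fixed-size vector, and the tweet-level LSTM then produces one hidden state per tweet in the thread. In a real model a classifier would sit on top of those states, and a deep learning library would replace the hand-written cell.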

The input of the tweet-level LSTM is also extended with context features, each represented as a binary value.

The additional context features are as follows:

- SameAuthor

- Conversation

- SameHashtag

- SameEmoji
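As a rough sketch of how the four binary features could be computed and attached to the tweet-level input: the `Tweet` record, its field names, and the two helper functions below are hypothetical. I also assume each feature compares a tweet with the one right before it in the thread, and that "extending the input" means simple concatenation. The paper's exact definitions may differ.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class Tweet:
    # Hypothetical record; the field names are illustrative only.
    tweet_id: int
    author: str
    reply_to: Optional[int] = None
    hashtags: FrozenSet[str] = frozenset()
    emojis: FrozenSet[str] = frozenset()


def context_features(current: Tweet, previous: Optional[Tweet]) -> List[float]:
    """Return [SameAuthor, Conversation, SameHashtag, SameEmoji] as 0/1 values."""
    if previous is None:  # the first tweet in a thread has no context
        return [0.0, 0.0, 0.0, 0.0]
    return [
        float(current.author == previous.author),           # SameAuthor
        float(current.reply_to == previous.tweet_id),       # Conversation
        float(bool(current.hashtags & previous.hashtags)),  # SameHashtag
        float(bool(current.emojis & previous.emojis)),      # SameEmoji
    ]


def extend_inputs(z: List[List[float]], thread: List[Tweet]) -> List[List[float]]:
    """Append the four binary features to each tweet representation z_t."""
    previous_tweets = [None] + thread[:-1]
    return [z_t + context_features(cur, prev)
            for z_t, cur, prev in zip(z, thread, previous_tweets)]


thread = [
    Tweet(1, "alice", hashtags=frozenset({"#nlp"})),
    Tweet(2, "bob", reply_to=1, hashtags=frozenset({"#nlp"}), emojis=frozenset({":)"})),
    Tweet(3, "bob", emojis=frozenset({":)"})),
]
z = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]  # stand-ins for word-level LSTM outputs
for row in extend_inputs(z, thread):
    print(row)
# [0.1, 0.2, 0.0, 0.0, 0.0, 0.0]
# [0.3, 0.4, 0.0, 1.0, 1.0, 0.0]
# [0.5, 0.6, 1.0, 0.0, 0.0, 1.0]
```

The extended vectors would then be fed to the tweet-level LSTM in place of the bare tweet representations, so its input size grows by four.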

Modeling Rich Contexts for Sentiment Classification with LSTM (Huang et al., arXiv 2016)
