This post is a brief summary of a paper I read out of study and curiosity: Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning (Jin et al., arXiv 2025).

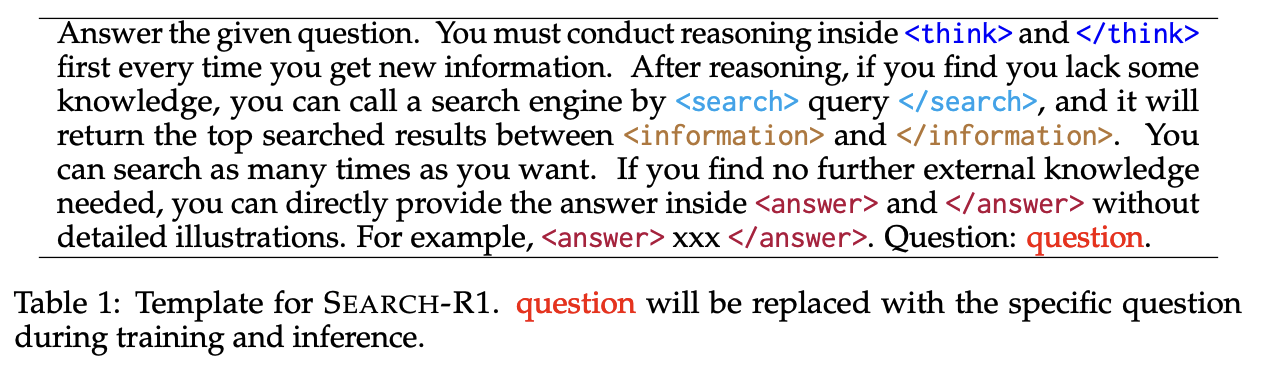

The following is an example of the prompt used for reinforcement learning in Search-R1.

For detailed experiments and explanations, refer to the paper, Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning (Jin et al., arXiv 2025).

Note (Abstract):

Efficiently acquiring external knowledge and up-to-date information is essential for effective reasoning and text generation in large language models (LLMs). Prompting advanced LLMs with reasoning capabilities to use search engines during inference is often suboptimal, as the LLM might not fully possess the capability on how to interact optimally with the search engine. This paper introduces Search-R1, an extension of reinforcement learning (RL) for reasoning frameworks where the LLM learns to autonomously generate (multiple) search queries during step-by-step reasoning with real-time retrieval. Search-R1 optimizes LLM reasoning trajectories with multi-turn search interactions, leveraging retrieved token masking for stable RL training and a simple outcome-based reward function. Experiments on seven question-answering datasets show that Search-R1 improves performance by 41% (Qwen2.5-7B) and 20% (Qwen2.5-3B) over various RAG baselines under the same setting. This paper further provides empirical insights into RL optimization methods, LLM choices, and response length dynamics in retrieval-augmented reasoning. The code and model checkpoints are available at [https://github.com/PeterGriffinJin/Search-R1](https://github.com/PeterGriffinJin/Search-R1).
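To make the two training ingredients named in the abstract more concrete, here is a minimal sketch of retrieved token masking and an outcome-based reward. It is an illustration under assumptions, not the authors' implementation: the tag names `<information>` and `</information>`, the helper names, and the exact-match normalization are all placeholders chosen for this example. The idea is that tokens copied in from the search engine are excluded from the loss, so only tokens the model generated itself are optimized, and that the reward depends only on whether the final answer is correct.

```python
import re
import string

# Assumed (hypothetical) tags that wrap retrieved passages in a rollout.
OPEN_TAG = "<information>"
CLOSE_TAG = "</information>"


def retrieved_token_mask(tokens):
    """Return 1 for model-generated tokens, 0 for retrieved tokens and their tags."""
    mask = []
    inside = False
    for tok in tokens:
        if tok == OPEN_TAG:
            inside = True
            mask.append(0)
        elif tok == CLOSE_TAG:
            mask.append(0)
            inside = False
        else:
            mask.append(0 if inside else 1)
    return mask


def masked_mean(per_token_loss, mask):
    """Average the loss over unmasked (model-generated) tokens only."""
    kept = [loss for loss, m in zip(per_token_loss, mask) if m]
    return sum(kept) / len(kept) if kept else 0.0


def normalize(text):
    """Lowercase, strip punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def outcome_reward(predicted, gold):
    """Assumed exact-match outcome reward: 1.0 if the final answer matches, else 0.0."""
    return 1.0 if normalize(predicted) == normalize(gold) else 0.0


tokens = ["<think>", "need", "</think>",
          "<information>", "Paris", "is", "</information>",
          "<answer>", "Paris", "</answer>"]
mask = retrieved_token_mask(tokens)
loss = masked_mean([1.0] * len(tokens), mask)
reward = outcome_reward("The Paris.", "paris")
```

In this toy rollout the four positions covering the retrieved span get a mask of 0, so they contribute nothing to the averaged loss, while the reward is computed from the final answer alone.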

Download URL:

The paper: Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning (Jin et al., arXiv 2025)

