This is a brief summary of paper for me to study and organize it, Language Modeling Teaches You More Syntax than Translation Does: Lessons Learned Through Auxiliary Task Analysis (Zhang and Bowman, under review in ICLR 2019) that I read and studied.

They investigated how well LSTM-based models with various training method induces syntactic information into their hidden representation, following that recently, researchers have begun to investigate the properties of learned representations by training auxiliary classifiers that use the hidden states of frozen, pretrained models to perform other tasks..

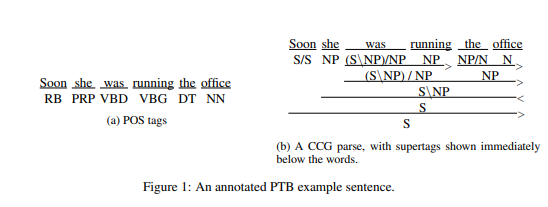

In order to analyze the property of how well they encode syntactic information, they implemented tasks such as POS tagging, CCG supertagging, and word idetity prediction.

Let’s see What is the POS tagging and CCG supertaging task, they are:

for word identity prediction task, it is :

for the sentence “I love NLP” and a time step shift of -2, we would train the classifier to take the hidden state for “NLP” and predict the word “I”.

for LSTM-based models to experiment how well they encode syntactic information into their hidden representation, they use bidirectional Language model, neural machiane translation, skip-thought, autoencoding, and untrained LSTM.

Their experiments focus on questions below:

- How does the training task affect how well models learn syntactic properties? Which tasks are better at inducing these properties?

- How does the amount of data the model is trained on affect these results? When does training on more data help?

They investigate these questions by holding the data source and model architecture constant, while varying both the training task and the amount of training data.

Specifically, they examine models trained on English-German (En-De) translation, language modeling, skip-thought (Kiros et al.,2015), and autoencoding, and also compare to an untrained LSTM model as a baseline.

by controlling for the data domain by exclusively training on datasets from the 2016 Conference on Machine Translation, they make fair comparisons between several data-rich training tasks in their ability to induce syntactic information.

They found that bidirectional language models (BiLMs) do better than translation and skip-thought encoders at extracting useful features for POS tagging and CCG supertagging.

Moreover, this improvement holds even when the BiLMs are trained on substantially less data than competing models.

Especially, for word identity prediction, they found that all trained LSTMs underperform untrained ones on word identity prediction.

This finding confirms that trained encoders genuinely capture substantial syntactic features, beyond mere word identity, that the auxiliary classifiers can use.

Their results suggest that for transfer learning, BiLMs like ELMo (Peters et al., 2018) capture more useful features than translation encoders—and that this holds even on genres for which data is not abundant.

They also find that randomly initialized encoders extract usable features for POS and CCG tagging at least when the auxiliary POS and CCG classifiers are themselves trained on reasonably large amounts of data.

The performance of untrained models drops sharply relative to trained ones when using smaller amounts of the classifier data.

They investigate further and find that untrained models outperform trained ones on the task of neighboring word identity prediction, which confirms that trained encoders do not perform well on tagging tasks because the classifiers are simply memorizing word identity information.

They also find that both trained and untrained LSTMs store more local neighboring word identity information in lower layers and more distant word identity information in upper layers, which suggests that depth in LSTMs allow them to capture larger context information.

For detailed experiment analysis, you can found in Language Modeling Teaches You More Syntax than Translation Does: Lessons Learned Through Auxiliary Task Analysis (Zhang and Bowman, under review in ICLR 2019)

Reference

- Paper

- How to use html for alert