This is a brief summary of paper for me to study and organize it, RACE: Large-scale ReAding Comprehension Dataset From Examinations (Lai et al., EMNLP 2017) I read and studied.

This paper proposed the new dataset for reading comprehension that they called RACE.

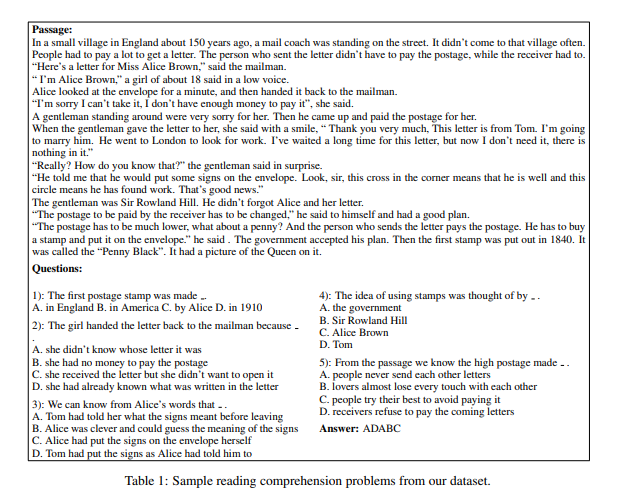

An example of RACE is as follows:

They explain strenghts of their dataset:

The advantages of their proposed dataset over existing large datasets in machine reading comprehension can be summarized as follows:

-

All questions and candidate options are generated by human experts, which are intentionally designed to test human agent’s ability in reading comprehension. This makes RACE a relatively accurate indicator for reflecting the text comprehension ability of machine learning systems under human judge.

-

The questions are substantially more difficult than those in existing datasets, in terms of the large portion of questions involving reasoning. At the meantime, it is also sufficiently large to support the training of deep learning models.

-

Unlike existing large-scale datasets, candidate options in RACE are human generated sentences which may not appear in the original passage. This makes the task more challenging and allows a rich type of questions such as passage summarization and attitude analysis.

-

Broad coverage in various domains and writing styles: a desirable property for evaluating generic (in contrast to domain/style-specific) comprehension ability of learning models.

Also comparing with the existing datasets for reading comprehension task which are MCTest, Cloze-style datasets, Datasets with Span-based Answers, and Datasets from Examinations.

In other words, RACE can be viewed as a larger and more difficult version of the MCTest dataset.

They prevent High noise, which is inevitable in cloze-style datasets due to their automatic generation process, from generating in new reading comprehnesion dataset.

To the best of their knowledge, RACE is the first large-scale dataset of this type, where questions are created based on exams designed to evaluate human performance in reading comprehension

They subdivide reasoning types of the questions:

-

Word matching: The question exactly matches a span in the article. The answer is self-evident.

-

Paraphrasing: The question is entailed or paraphrased by exactly one sentence in the passage. The answer can be extracted within the sentence.

-

Single-sentence reasoning: The answer could be inferred from a single sentence of the article by recognizing incomplete information or conceptual overlap.

-

Multi-sentence reasoning: The answer must be inferred from synthesizing information distributed across multiple sentences.

-

Insufficient/Ambiguous: The question has no answer or the answer

The paper: RACE: Large-scale ReAding Comprehension Dataset From Examinations (Lai et al., EMNLP 2017)