This is a brief summary, written to help me study and organize my notes, of the paper context2vec: Learning Generic Context Embedding with Bidirectional LSTM (Melamud et al., CoNLL 2016).

The paper shows how to embed the sentential context of a target word into a fixed-length vector using a bidirectional LSTM.

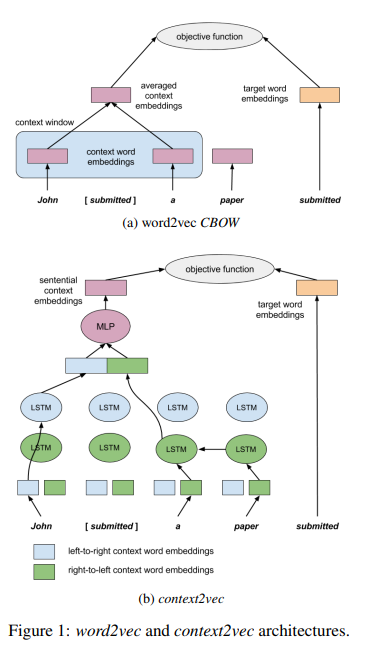

As the figure above shows, they used a bidirectional LSTM, feeding one LSTM network with the sentence from left to right and another from right to left. The parameters of these two networks are completely separate, including two separate sets of left-to-right and right-to-left context word embeddings.

To represent the sentential context as a single embedding, they concatenate the left-to-right context vector and the right-to-left context vector, as shown in Figure 1(b).
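The concatenation step can be sketched in a few lines of plain Python. This is only an illustration, not the authors' implementation: `toy_encoder` is a hypothetical stand-in for an LSTM (a simple tanh recurrence), the embeddings are random, and the names `random_embeddings`, `sentential_context`, and `DIM` are assumptions made for this example.

```python
import math
import random

DIM = 3  # hypothetical state size, chosen small for readability


def random_embeddings(vocab, seed, dim=DIM):
    """Random word embeddings; a trained model would learn these."""
    rng = random.Random(seed)
    return {w: [rng.uniform(-1, 1) for _ in range(dim)] for w in vocab}


def toy_encoder(vectors, dim=DIM):
    """Toy recurrence standing in for an LSTM: h = tanh(0.5 * h + x)."""
    h = [0.0] * dim
    for x in vectors:
        h = [math.tanh(0.5 * hi + xi) for hi, xi in zip(h, x)]
    return h


def sentential_context(tokens, target_idx, left_emb, right_emb):
    """Concatenate the left-to-right and right-to-left context states."""
    left_words = tokens[:target_idx]                # read left to right
    right_words = tokens[target_idx + 1:][::-1]     # read right to left
    left_state = toy_encoder([left_emb[w] for w in left_words])
    right_state = toy_encoder([right_emb[w] for w in right_words])
    return left_state + right_state                 # list concatenation


tokens = ["John", "submitted", "a", "paper"]
# Two separate embedding tables, one per direction.
left_emb = random_embeddings(tokens, seed=0)
right_emb = random_embeddings(tokens, seed=1)
ctx = sentential_context(tokens, 1, left_emb, right_emb)
```

Note that the target word itself ("submitted") is never fed to either encoder, so the resulting vector depends only on the surrounding words.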

The objective function measures how likely a target word vector and a sentential context vector are to form a pair, following the CBOW method. They trained it with the negative sampling technique.
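As a rough sketch of what such an objective looks like, the function below computes a word2vec-style negative sampling loss for a single (target, context) pair. It assumes the target and context vectors have the same dimension and that negative target vectors have already been sampled; the function names are hypothetical and not taken from the paper's code.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def negative_sampling_loss(context_vec, target_vec, negative_vecs):
    """Loss for one pair: -[log s(t . c) + sum_i log s(-t_i . c)],
    where s is the sigmoid, t the true target, t_i the sampled negatives."""
    score = log_sigmoid(dot(target_vec, context_vec))
    for neg in negative_vecs:
        score += log_sigmoid(-dot(neg, context_vec))
    return -score


context = [1.0, 0.0]
good_target = [1.0, 0.0]    # aligned with the context
bad_target = [-1.0, 0.0]    # points away from the context
loss_good = negative_sampling_loss(context, good_target, [bad_target])
loss_bad = negative_sampling_loss(context, bad_target, [good_target])
```

Minimizing this loss pushes the true target vector toward its context vector and the sampled negatives away from it, so `loss_good` comes out smaller than `loss_bad` here.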

In their embedding, they used two special tokens, BOS and EOS, which mark the beginning and the end of a sentence, respectively.
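A minimal sketch of how these tokens might be used when splitting a sentence around a target word is shown below. The token spellings `<BOS>` and `<EOS>` and the helper `split_contexts` are assumptions for illustration only.

```python
BOS, EOS = "<BOS>", "<EOS>"  # hypothetical spellings of the special tokens


def split_contexts(tokens, target_idx):
    """Return the left context (read left to right, starting at BOS) and
    the right context (read right to left, starting at EOS)."""
    padded = [BOS] + tokens + [EOS]
    t = target_idx + 1                 # shift by one because of BOS
    left = padded[:t]
    right = padded[t + 1:][::-1]
    return left, right


left, right = split_contexts(["John", "submitted", "a", "paper"], 1)
# left  == ["<BOS>", "John"]
# right == ["<EOS>", "paper", "a"]
```

With the padding in place, neither direction ever receives an empty input, even when the target is the first or the last word of the sentence.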

The paper: context2vec: Learning Generic Context Embedding with Bidirectional LSTM (Melamud et al., CoNLL 2016)
