The paper End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF (Ma and Hovy, ACL 2016) proposes a neural network architecture for sequence labeling and evaluates it on two tasks: part-of-speech (POS) tagging and named entity recognition (NER).

The model is truly end-to-end: it relies on no task-specific resources, feature engineering, or data pre-processing.

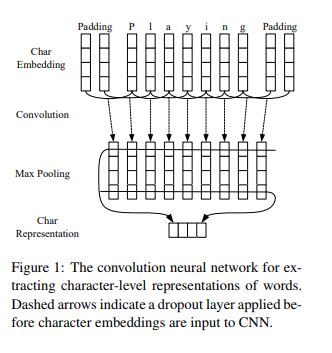

They use a convolutional neural network (CNN) to extract morphological information (such as the prefix or suffix of a word) from the characters of each word and encode it into a neural representation, as follows.

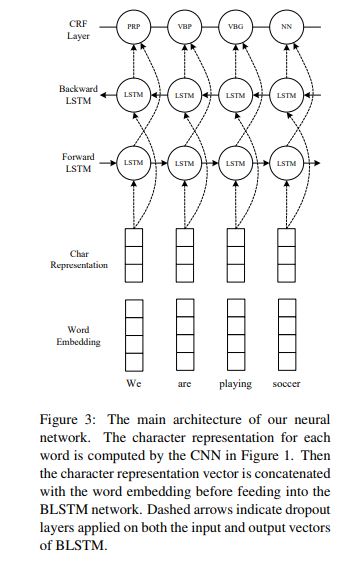
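A minimal pure-Python sketch of this character-level CNN follows: each character is mapped to an embedding, the word is padded on both sides, every filter slides over windows of consecutive characters, and max pooling keeps one value per filter, so the output size does not depend on the word length. The `<PAD>` symbol, the dimensions, the filter width, and the function name `char_cnn_representation` are illustrative assumptions. The sketch omits the bias, any nonlinearity, and training, and it is not the authors' code.

```python
import random
import string

PAD = "<PAD>"  # hypothetical padding symbol (an assumption of this sketch)

def char_cnn_representation(word, char_emb, filters):
    """Character-level word representation: 1D convolution + max pooling.

    char_emb: dict mapping each character (and PAD) to a vector of size d.
    filters:  list of filters; each filter is a list of `width` vectors of size d.
    Returns one feature per filter.
    """
    width = len(filters[0])
    half = width // 2
    # Pad both ends so the first and last characters are covered by full windows
    chars = [PAD] * half + list(word) + [PAD] * half
    vectors = [char_emb[c] for c in chars]
    features = []
    for filt in filters:
        window_scores = []
        for start in range(len(vectors) - width + 1):
            window = vectors[start:start + width]
            # Dot product between the filter and the stacked window of char vectors
            score = sum(w * x
                        for w_vec, x_vec in zip(filt, window)
                        for w, x in zip(w_vec, x_vec))
            window_scores.append(score)
        # Max pooling over positions keeps the strongest response of this filter
        features.append(max(window_scores))
    return features

# Usage with random (untrained) parameters: 4-dim char embeddings, 5 filters of width 3
rng = random.Random(0)
d, num_filters, width = 4, 5, 3
char_emb = {c: [rng.uniform(-0.5, 0.5) for _ in range(d)] for c in string.ascii_letters}
char_emb[PAD] = [0.0] * d
filters = [[[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(width)]
           for _ in range(num_filters)]
print(len(char_cnn_representation("Hovy", char_emb, filters)))      # 5
print(len(char_cnn_representation("sequence", char_emb, filters)))  # 5
```

Because of max pooling, a 4-character word and an 8-character word both yield a 5-dimensional vector here, which is what lets the character representation be combined with a fixed-size word embedding.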

The overall model architecture they propose is the following:

As shown in the architecture, the character-level representation produced by the CNN is concatenated with the word embedding, and the combined vector is fed as input to a bidirectional LSTM (BLSTM).
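The sketch below illustrates how the BLSTM input could be formed and processed, assuming a standard LSTM cell (input, forget, and output gates plus a candidate cell state) written in plain Python. The word and character vectors are random stand-ins, and the sizes and helper names (`init_lstm`, `lstm`, `bilstm`) are hypothetical; a real model would use a deep learning library and learned parameters.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m, v):
    # Matrix (list of rows) times vector
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def init_lstm(input_size, hidden_size, rng):
    # Stacked weights for the input (i), forget (f), output (o) gates and candidate (g)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {"W": mat(4 * hidden_size, input_size),
            "U": mat(4 * hidden_size, hidden_size),
            "b": [0.0] * (4 * hidden_size)}

def lstm(params, inputs):
    H = len(params["U"][0])  # hidden size
    h, c = [0.0] * H, [0.0] * H
    outputs = []
    for x in inputs:
        z = [a + b + bias for a, b, bias in
             zip(matvec(params["W"], x), matvec(params["U"], h), params["b"])]
        i = [sigmoid(v) for v in z[0:H]]
        f = [sigmoid(v) for v in z[H:2 * H]]
        o = [sigmoid(v) for v in z[2 * H:3 * H]]
        g = [math.tanh(v) for v in z[3 * H:]]
        c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        outputs.append(h)
    return outputs

def bilstm(fwd_params, bwd_params, inputs):
    forward = lstm(fwd_params, inputs)
    # Run the second LSTM right-to-left, then re-align it with the original positions
    backward = lstm(bwd_params, inputs[::-1])[::-1]
    # Each token's output is [forward state ; backward state]
    return [hf + hb for hf, hb in zip(forward, backward)]

# Usage with hypothetical 3-dim word embeddings and 2-dim char-CNN features
rng = random.Random(0)
sentence = ["EU", "rejects", "German", "call"]
word_vec = {w: [rng.uniform(-1, 1) for _ in range(3)] for w in sentence}
char_vec = {w: [rng.uniform(-1, 1) for _ in range(2)] for w in sentence}
# BLSTM input for each token: word embedding concatenated with char representation
inputs = [word_vec[w] + char_vec[w] for w in sentence]
hidden = 4
fwd = init_lstm(5, hidden, rng)
bwd = init_lstm(5, hidden, rng)
outputs = bilstm(fwd, bwd, inputs)
print(len(outputs), len(outputs[0]))  # 4 8
```

Concatenating the two directions gives every token a representation that summarizes both its left and its right context, which is what the label decoder consumes.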

To decode the labels, they use a conditional random field (CRF) layer on top of the BLSTM. A CRF is beneficial here because it models the correlations between neighboring labels and jointly decodes the best chain of labels for a given input sequence, instead of predicting each label independently.
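Decoding in a linear-chain CRF can be done with the Viterbi algorithm, which finds the highest-scoring label sequence in O(n·K²) time for n tokens and K labels. The sketch below assumes the score of a label sequence is the sum of per-token emission scores (for example, computed from the BLSTM outputs) and label-to-label transition scores. Start/end transitions and the training objective are left out, and the toy tag set and numbers are made up for illustration.

```python
import itertools

def sequence_score(emissions, transitions, labels):
    """Unnormalized CRF score: emission scores plus transition scores along the chain."""
    score = emissions[0][labels[0]]
    for t in range(1, len(labels)):
        score += transitions[labels[t - 1]][labels[t]] + emissions[t][labels[t]]
    return score

def viterbi(emissions, transitions):
    """Return (best label sequence, its score) for a linear-chain CRF.

    emissions[t][j]:   score of label j at position t
    transitions[i][j]: score of label i being followed by label j
    """
    num_labels = len(emissions[0])
    best = list(emissions[0])  # best score of any path ending in each label
    backpointers = []
    for t in range(1, len(emissions)):
        new_best, pointers = [], []
        for j in range(num_labels):
            # Best previous label to transition from into label j
            i_best = max(range(num_labels), key=lambda i: best[i] + transitions[i][j])
            new_best.append(best[i_best] + transitions[i_best][j] + emissions[t][j])
            pointers.append(i_best)
        best = new_best
        backpointers.append(pointers)
    # Follow back-pointers from the best final label
    last = max(range(num_labels), key=lambda j: best[j])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]

def brute_force(emissions, transitions):
    """Exhaustive search over all label sequences, only for checking small cases."""
    num_labels = len(emissions[0])
    candidates = itertools.product(range(num_labels), repeat=len(emissions))
    best_labels = max(candidates, key=lambda y: sequence_score(emissions, transitions, y))
    return list(best_labels), sequence_score(emissions, transitions, best_labels)

# Labels: 0 = O, 1 = B-PER, 2 = I-PER (a toy tag set)
transitions = [
    [0.0, 0.0, -10.0],  # O -> I-PER is made very unlikely
    [0.0, 0.0, 1.0],    # B-PER -> I-PER is encouraged
    [0.0, 0.0, 0.0],
]
emissions = [[0.0, 1.0, 0.0], [0.5, 0.0, 0.4]]
path, score = viterbi(emissions, transitions)
print(path)  # [1, 2]
```

In the toy example, a per-token argmax would pick `[1, 0]` (B-PER followed by O), but the transition score for B-PER → I-PER makes `[1, 2]` the best chain overall. The `brute_force` helper enumerates all K^n sequences and is only meant for checking small cases.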

They also use several deep learning techniques, such as dropout and pre-trained word embeddings.
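Both techniques are easy to sketch in plain Python. The code below builds an embedding table that copies pre-trained vectors where they exist and randomly initializes the remaining words, and it applies inverted dropout. The toy vectors, the dropout rate `p=0.5`, the function names, and the uniform initialization range are illustrative assumptions, not values taken from this post.

```python
import math
import random

def build_embedding_table(vocab, pretrained, dim, rng):
    """Use pre-trained vectors where available; randomly initialize the rest.

    The uniform range [-sqrt(3/dim), +sqrt(3/dim)] is an assumption of this
    sketch (a common choice), not something stated in this post.
    """
    bound = math.sqrt(3.0 / dim)
    table = {}
    for word in vocab:
        if word in pretrained:
            table[word] = list(pretrained[word])
        else:
            table[word] = [rng.uniform(-bound, bound) for _ in range(dim)]
    return table

def dropout(vector, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p and rescale the rest
    by 1 / (1 - p), so no rescaling is needed at test time."""
    if not training or p == 0.0:
        return list(vector)
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in vector]

rng = random.Random(42)
# Toy stand-in for pre-trained word vectors
pretrained = {"the": [0.1, -0.2, 0.3, 0.0], "paper": [0.5, 0.4, -0.1, 0.2]}
table = build_embedding_table(["the", "paper", "BLSTM"], pretrained, dim=4, rng=rng)
print(table["the"])  # [0.1, -0.2, 0.3, 0.0]
print(dropout(table["paper"], p=0.5, rng=rng))  # random: each unit is 0.0 or doubled
print(dropout(table["paper"], p=0.5, rng=rng, training=False))  # [0.5, 0.4, -0.1, 0.2]
```

Rescaling during training keeps the expected activation unchanged, so the same network can be used at test time with dropout simply switched off.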

As future work, they suggest that the model could be further improved by exploring multi-task learning approaches that combine more useful and correlated information.

For example, the model could be trained jointly on both POS and NER tags to improve the intermediate representations learned by the network.

The paper: End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF (arXiv version)

The paper: End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF (ACL version)

References

- Paper
- How to use HTML for alerts
- Example with TensorFlow
- WILDML: Understanding Convolutional Neural Networks for NLP
- Stack Exchange: CNN translation invariance
- Stack Overflow: Understanding 1D, 2D, and 3D convolutions in convolutional neural networks
- Stack Exchange: 1D vs. 2D CNNs
- Towards Data Science: Implementing a linear-chain Conditional Random Field (CRF) in PyTorch
- Alibaba Cloud: HMM, MEMM, and CRF: A Comparative Analysis of Statistical Modeling Methods
- David S. Batista's blog: Maximum Entropy Markov Models and Logistic Regression