This page is a brief summary of LSTM Neural Network for Language Modeling (Sundermeyer et al., INTERSPEECH 2012), written for my own study.

A language model works like this: given the text “A B C X”, where “A B C” is already known, it uses statistics learned from a corpus to predict which word X is likely to appear in that context.

In other words, the goal of a language model (LM) is to predict a word (X) given its context (“A B C”).
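Written as a formula, this is the usual chain-rule factorization of a word sequence. The symbols $w_n$ (the n-th word) and $N$ (the sequence length) are notation I am assuming here, not notation taken from this page.

```latex
P(w_1, \dots, w_N) = \prod_{n=1}^{N} P(w_n \mid w_1, \dots, w_{n-1}),
\qquad \text{e.g. the last factor for ``A B C X'' is } P(X \mid A, B, C)
```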

In this paper, the authors tackle language modeling with an LSTM (long short-term memory) neural network.

Existing approaches to language modeling have the following problems:

- Statistical models use n-gram frequencies to predict the next word given the past context. However, when you compute word-based n-gram probabilities, you can run into an n-gram that never occurred in the corpus, so its probability was never estimated. The authors describe the back-off model as the alternative for this case.

- Back-off model:

- An n-gram language model estimates the conditional probability of a word given its history within the n-gram.

- If no n-gram probability is available, the (n-1)-gram probability is used instead.

- Neural network models based on a feedforward neural network or a vanilla RNN.

- A feedforward neural network can only exploit a fixed-length context.

- A vanilla RNN places no limit on the context length, but in practice it only captures short-range dependencies on its history because of the vanishing gradient problem.

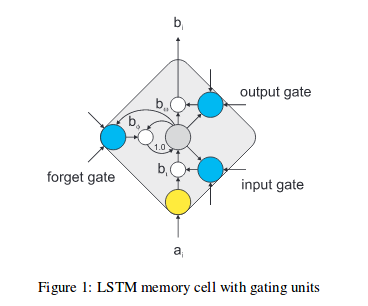
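The back-off idea from the list above can be sketched in a few lines of Python. This is a deliberately simplified scheme with a fixed penalty per back-off step (in the style of "stupid backoff"), not the smoothed back-off models used in practice. The names `train_counts`, `backoff_score` and the value `alpha=0.4` are my own assumptions for illustration, and the result is a relative score rather than a normalized probability.

```python
from collections import Counter


def train_counts(tokens, max_order=3):
    """Count every n-gram of order 1..max_order in a list of tokens."""
    counts = Counter()
    for n in range(1, max_order + 1):
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts


def backoff_score(word, context, counts, total_tokens, alpha=0.4):
    """Score `word` after `context`, backing off to shorter contexts.

    alpha is a hypothetical fixed penalty applied once per back-off step.
    """
    context = tuple(context)
    penalty = 1.0
    while context:
        ngram = context + (word,)
        if counts[ngram] > 0:
            # The n-gram was seen: use its relative frequency.
            return penalty * counts[ngram] / counts[context]
        # Unseen n-gram: drop the oldest context word and try (n-1)-grams.
        context = context[1:]
        penalty *= alpha
    # No context left: fall back to the unigram relative frequency.
    return penalty * counts[(word,)] / total_tokens


tokens = "a b c a b d".split()
counts = train_counts(tokens, max_order=3)
seen = backoff_score("c", ["a", "b"], counts, len(tokens))    # trigram "a b c" was seen
unseen = backoff_score("d", ["x", "b"], counts, len(tokens))  # backs off to bigram "b d"
```

In the toy corpus, "a b c" is scored directly from trigram counts, while "x b d" was never seen, so the score comes from the bigram "b d" with one penalty factor applied.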

So they used the LSTM memory cell, which retains information over longer spans better than a vanilla RNN.

Below is the LSTM variant they use, which is based on the LSTM of F. A. Gers et al.

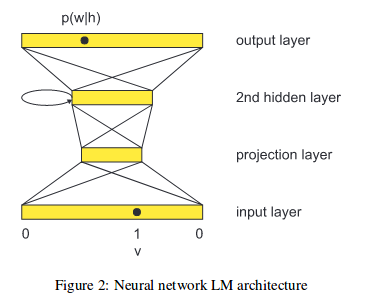
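To make the gating idea concrete, here is a minimal sketch of one time step of a single LSTM memory cell, using scalars instead of vectors. It follows the commonly used formulation with input, forget and output gates. It leaves out any peephole connections and may differ in detail from the variant in the figure, so treat it as an illustration, not as the authors' exact equations. The function name `lstm_cell_step` and the weight layout `w` are assumptions of mine.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_cell_step(x, h_prev, c_prev, w):
    """One time step of a single scalar LSTM cell.

    w maps each of "i", "f", "o", "g" to a tuple (w_x, w_h, bias);
    this layout is hypothetical and chosen only for readability.
    """
    def pre(name):
        w_x, w_h, b = w[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("i"))     # input gate: how much new input to write
    f = sigmoid(pre("f"))     # forget gate: how much old state to keep
    o = sigmoid(pre("o"))     # output gate: how much state to expose
    g = math.tanh(pre("g"))   # candidate value to write into the cell
    c = f * c_prev + i * g    # additive update of the cell state
    h = o * math.tanh(c)      # cell output
    return h, c


# Forget gate pushed to ~1 and input gate to ~0: the cell keeps its state.
w_keep = {"i": (0.0, 0.0, -100.0), "f": (0.0, 0.0, 100.0),
          "o": (0.0, 0.0, 100.0), "g": (0.0, 0.0, 0.0)}
h, c = lstm_cell_step(x=1.0, h_prev=0.0, c_prev=0.5, w=w_keep)
```

Because the cell state is updated additively and scaled by the forget gate, a gate value close to 1 lets the stored value pass through many time steps almost unchanged. This is the memory capability that a vanilla RNN lacks.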

They also used one-hot encoding for the input words, followed by a projection layer.
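The interaction between one-hot encoding and a projection layer can be illustrated with a small sketch. Multiplying a one-hot vector by a projection matrix simply selects one row of that matrix, which is the word's dense representation. The vocabulary, the matrix values and the function names below are made up for illustration and do not come from the paper.

```python
def one_hot(index, vocab_size):
    """Return a vector of zeros with a single 1.0 at `index`."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec


def project(one_hot_vec, projection):
    """Multiply a one-hot row vector by a (vocab_size x dim) matrix."""
    dim = len(projection[0])
    return [
        sum(one_hot_vec[v] * projection[v][d] for v in range(len(one_hot_vec)))
        for d in range(dim)
    ]


vocab = ["A", "B", "C", "X"]          # toy vocabulary (assumed)
projection = [                        # arbitrary example weights
    [0.1, -0.2],
    [0.3, 0.4],
    [0.5, -0.6],
    [0.7, 0.8],
]

word_index = vocab.index("C")
dense = project(one_hot(word_index, len(vocab)), projection)
```

Here `dense` equals `projection[word_index]`, so in an actual implementation the matrix multiplication is usually replaced by a direct row lookup.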

The paper: LSTM_Neural_Network_for_Language_Modeling (Sundermeyer et al., INTERSPEECH 2012)