Pooling, in particular Max pooling

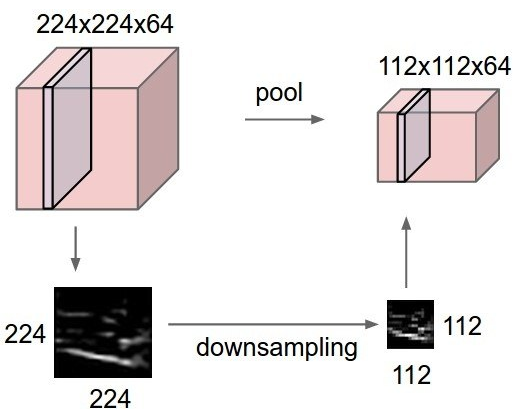

Pooling helps reduce over-fitting by producing a more abstract form of the representation (e.g. an image or activation features).

Basically, pooling is down-sampling as follows.

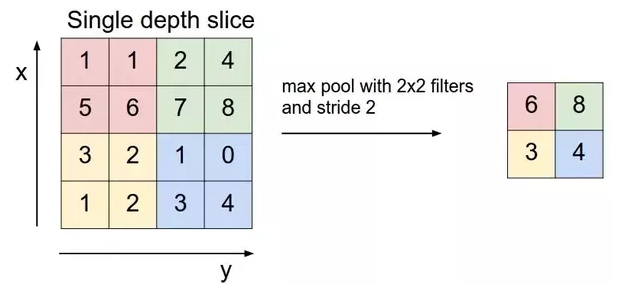

Max pooling applies the max operation to the input, i.e. it takes the maximum value of the input within each ksize window.
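To make the window idea concrete, here is a minimal pure-Python sketch of one-dimensional max pooling without padding. The function name `max_pool_1d` and the sample values are illustrative assumptions and are not part of TensorFlow.

```python
def max_pool_1d(values, ksize, stride=1):
    """Max-pool a 1-D list without padding (hypothetical helper, not a TensorFlow API)."""
    if ksize > len(values):
        raise ValueError("ksize must not exceed the input length")
    pooled = []
    # Slide the window over the input and keep the largest value in each window.
    for start in range(0, len(values) - ksize + 1, stride):
        pooled.append(max(values[start:start + ksize]))
    return pooled


# Illustrative input; a window of 2 with stride 2 halves the length.
signal = [1, 3, 2, 9, 4, 6]
print(max_pool_1d(signal, ksize=2, stride=2))  # [3, 9, 6]
print(max_pool_1d(signal, ksize=3, stride=1))  # [3, 9, 9, 9]
```

A larger stride skips positions and shrinks the output, which is the down-sampling effect described above.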

    """Example code for tf.nn.max_pool
    (https://www.tensorflow.org/versions/r1.8/api_docs/python/tf/nn/max_pool)

    tf.nn.max_pool(
        value,
        ksize,
        strides,
        padding,
        data_format='NHWC',
        name=None
    )
    """
    import sys

    import tensorflow as tf
    import numpy as np

    print("=== Version checking ===")
    print("The version of sys: \n{}".format(sys.version))
    print("Tensorflow version: {}".format(tf.__version__))
    print("========================")

Output:

    === Version checking ===
    The version of sys:
    3.5.2 (default, Nov 23 2017, 16:37:01)
    [GCC 5.4.0 20160609]
    Tensorflow version: 1.8.0
    ========================

Before pooling, let's work out the expected height and width of the output.

The padding scheme is the same as the one used when convolving data in NHWC format. If your data is in another format, pass the data_format argument; it defaults to "NHWC".

The following functions calculate the output's height and width, provided you already know the ksize list, in_height, in_width, and the strides list. The ksize list is analogous to the filter list of the conv2d operation.

Let's look at an example that calculates the output's height and width.


    # max pooling verification after cnn text classification
    batch_size = 1
    in_height = 5  # same as the sequence length
    in_width = 1
    in_channels = 1

    filter_size = 2
    sequence_length = in_height
    strides = [1, 1, 1, 1]

    # Height and width of the pooling window
    filter_height = sequence_length - filter_size + 1
    filter_width = 1
    ksize = [1, filter_height, filter_width, 1]


    def check_output_size_with_VALID(in_height, in_width, strides, filter_height, filter_width):
        out_height = np.ceil(float(in_height - filter_height + 1) / float(strides[1]))
        out_width = np.ceil(float(in_width - filter_width + 1) / float(strides[2]))

        print("VALID padding is no padding")
        print("output_height: {}".format(out_height))
        print("output_width: {}".format(out_width))  # report the computed width


    print("What is height and width of output?")
    check_output_size_with_VALID(in_height, in_width, strides, filter_height, filter_width)

Output:

    What is height and width of output?
    VALID padding is no padding
    output_height: 2.0
    output_width: 1.0
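The same VALID formula also covers strides larger than 1. The sketch below restates it for a single dimension using only the standard library; `valid_output_size` is a hypothetical helper name, and the last two calls use illustrative inputs rather than values from the example above.

```python
import math


def valid_output_size(in_size, window, stride):
    """Output length along one dimension for VALID (no padding)."""
    # Same formula as check_output_size_with_VALID, returned as an int.
    return int(math.ceil(float(in_size - window + 1) / float(stride)))


print(valid_output_size(5, 4, 1))  # 2, the height from the example above
print(valid_output_size(5, 2, 2))  # 2, the last input row is dropped
print(valid_output_size(7, 3, 2))  # 3
```

With VALID, any trailing rows or columns that do not fill a complete window are simply ignored.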


    def check_output_size_with_SAME(in_height, in_width, strides, filter_height, filter_width):
        out_height = np.ceil(float(in_height) / float(strides[1]))
        out_width = np.ceil(float(in_width) / float(strides[2]))

        print("SAME has padding, and it is the smallest possible padding")
        print("output_height: {}".format(out_height))
        print("output_width: {}".format(out_width))

        # Total padding needed along each dimension
        if in_height % strides[1] == 0:
            pad_along_height = max(filter_height - strides[1], 0)
        else:
            pad_along_height = max(filter_height - (in_height % strides[1]), 0)
        if in_width % strides[2] == 0:
            pad_along_width = max(filter_width - strides[2], 0)
        else:
            pad_along_width = max(filter_width - (in_width % strides[2]), 0)

        print("pad along height and width...")
        print("pad along height: {}".format(pad_along_height))
        print("pad along width: {}".format(pad_along_width))

        # Split the padding in two; an odd leftover goes to the bottom/right
        pad_top = pad_along_height // 2
        pad_bottom = pad_along_height - pad_top
        pad_left = pad_along_width // 2
        pad_right = pad_along_width - pad_left

        print("Padding size on top, bottom, left and right")
        print("top: {}".format(pad_top))
        print("bottom: {}".format(pad_bottom))
        print("left: {}".format(pad_left))
        print("right: {}".format(pad_right))


    print("What is height and width of output?")
    check_output_size_with_SAME(in_height, in_width, strides, filter_height, filter_width)

Output:

    What is height and width of output?
    SAME has padding, and it is the smallest possible padding
    output_height: 5.0
    output_width: 1.0
    pad along height and width...
    pad along height: 3
    pad along width: 0
    Padding size on top, bottom, left and right
    top: 1
    bottom: 2
    left: 0
    right: 0

Given the output height and width computed by the functions above, you can expect the pooling results to look as follows:


    # No initializer is given, so the variable starts with random values
    input_ = tf.get_variable("input_data", shape=[batch_size, in_height, in_width, in_channels], dtype=tf.float32)

    pooled_with_valid = tf.nn.max_pool(input_,
                                       ksize=ksize,
                                       strides=strides,
                                       padding="VALID",
                                       name="Pooling_")

    pooled_with_same = tf.nn.max_pool(input_,
                                      ksize=ksize,
                                      strides=strides,
                                      padding="SAME",
                                      name="Pooling_")

    init_op = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init_op)
        print("""Original input data""")
        print(sess.run(input_))
        print("""\nMax pooling with VALID Scheme""")
        print(sess.run(pooled_with_valid))
        print("""\nMax pooling with SAME Scheme""")
        print(sess.run(pooled_with_same))

Output:

    Original input data
    [[[[ 0.01192802]]

      [[ 0.6658397 ]]

      [[-0.25263596]]

      [[ 0.14396685]]

      [[-0.711636  ]]]]

    Max pooling with VALID Scheme
    [[[[0.6658397]]

      [[0.6658397]]]]

    Max pooling with SAME Scheme
    [[[[0.6658397 ]]

      [[0.6658397 ]]

      [[0.6658397 ]]

      [[0.14396685]]

      [[0.14396685]]]]
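Both results can be checked by hand. The following is a minimal sketch, assuming a single column of values and a stride of 1, that splits the SAME padding the same way as check_output_size_with_SAME and then takes the maximum of each window. The helper `max_pool_column` is hypothetical, and modelling padded cells as negative infinity (so they never win the max) is an assumption made for illustration; the input values are copied from the output above.

```python
def max_pool_column(values, window, padding):
    """Max-pool a 1-D column with stride 1 (hypothetical helper for illustration)."""
    if padding == "SAME":
        # With stride 1 the total padding is window - 1, split as in
        # check_output_size_with_SAME: the smaller half goes on top.
        pad_total = max(window - 1, 0)
        pad_top = pad_total // 2
        pad_bottom = pad_total - pad_top
        neg_inf = float("-inf")  # assumption: padded cells never win the max
        values = [neg_inf] * pad_top + list(values) + [neg_inf] * pad_bottom
    elif padding != "VALID":
        raise ValueError("padding must be 'VALID' or 'SAME'")
    return [max(values[i:i + window]) for i in range(len(values) - window + 1)]


# Values copied from the "Original input data" output above.
column = [0.01192802, 0.6658397, -0.25263596, 0.14396685, -0.711636]
print(max_pool_column(column, window=4, padding="VALID"))
# [0.6658397, 0.6658397]
print(max_pool_column(column, window=4, padding="SAME"))
# [0.6658397, 0.6658397, 0.6658397, 0.14396685, 0.14396685]
```

With one padded cell on top and two at the bottom, the last two SAME windows no longer contain 0.6658397, which is why they fall back to 0.14396685.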