While studying Korean natural language processing with neural networks, I was looking for an architecture for my work.

So I read the paper *Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation* (Wu et al., arXiv 2016), and it showed me how to deal with sequence data.

The paper presents an end-to-end model for neural machine translation. In my case, I wanted to understand the network architecture, specifically how LSTMs are used for translation.

I was inspired by this paper.

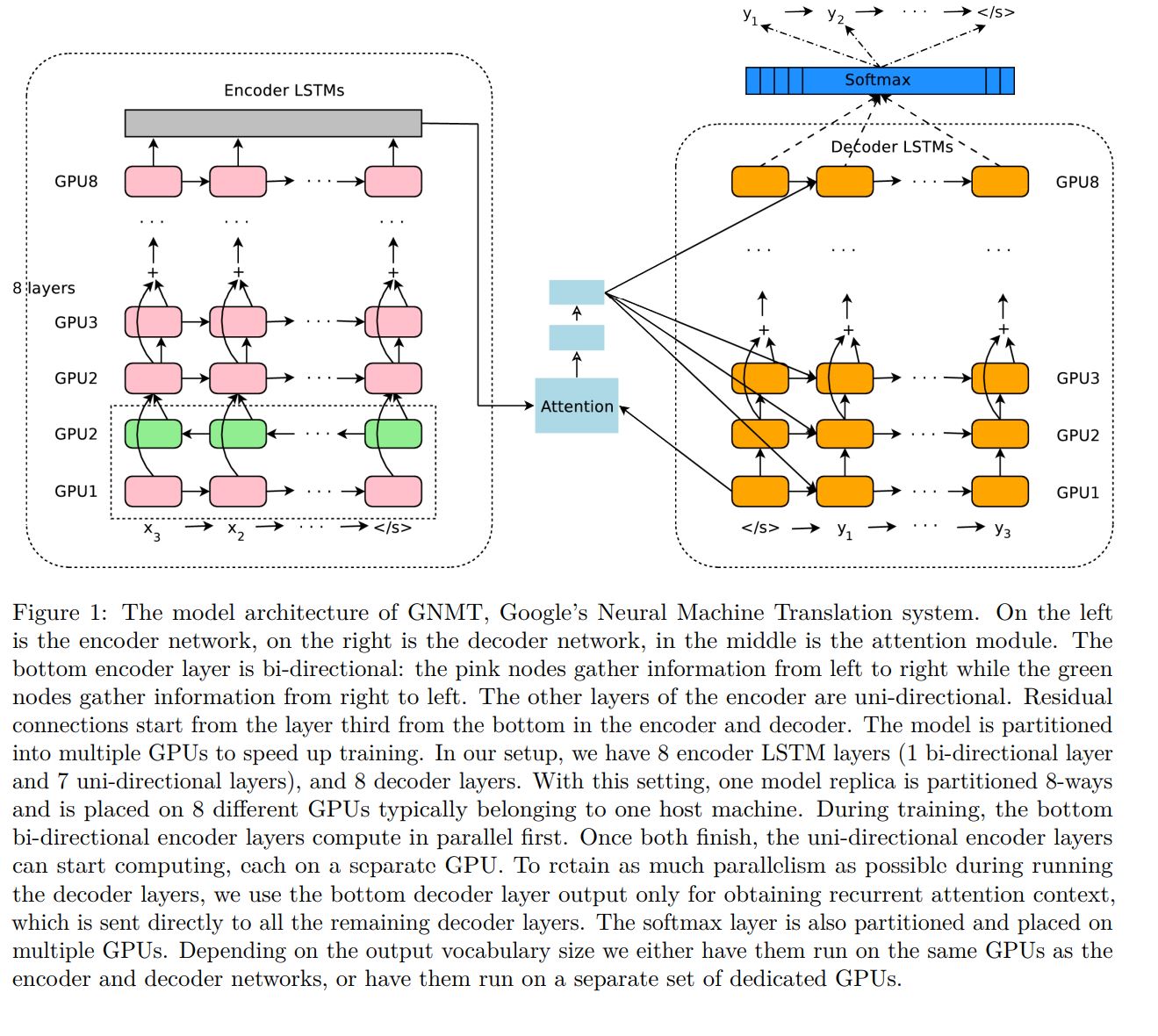

Their basic neural machine translation architecture has three components: an encoder, an attention mechanism, and a decoder.

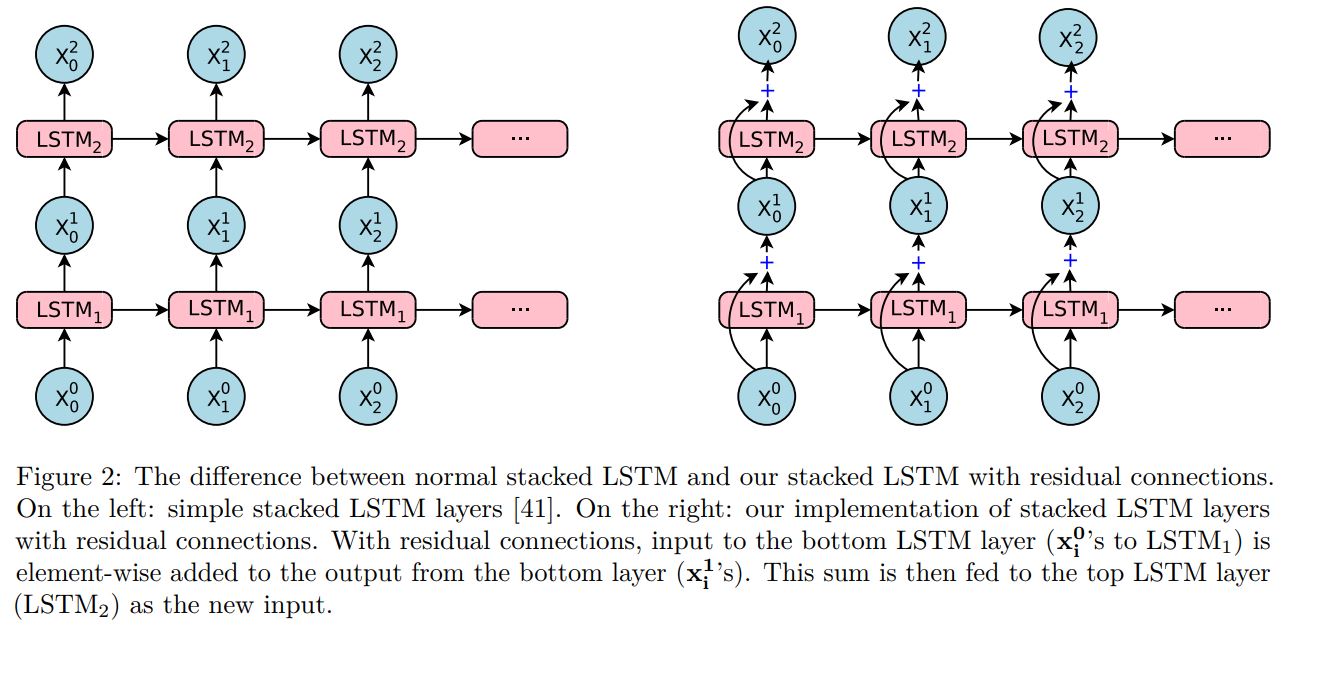
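To make the attention step concrete, here is a minimal pure-Python sketch of one decoder step attending over the encoder outputs. It scores each encoder output against the current decoder state, normalizes the scores with a softmax, and returns the weighted sum of encoder outputs as the context vector. The dot-product score is my simplification (the paper computes the score with a small feed-forward network), and the names `attend` and `softmax` are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state: Vector, encoder_outputs: List[Vector]) -> Tuple[List[float], Vector]:
    """One attention step: returns (attention weights, context vector)."""
    # Dot-product score between the decoder state and each encoder output
    # (a simplification; the paper uses a one-hidden-layer feed-forward network).
    scores = [sum(d * e for d, e in zip(decoder_state, enc)) for enc in encoder_outputs]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder outputs.
    dim = len(encoder_outputs[0])
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_outputs)) for k in range(dim)]
    return weights, context


encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], encoder_outputs)
print(weights, context)
```

The decoder repeats this at every output step, so the context it receives changes depending on which source positions are most relevant to the word it is producing.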

They also used residual connections, like this:

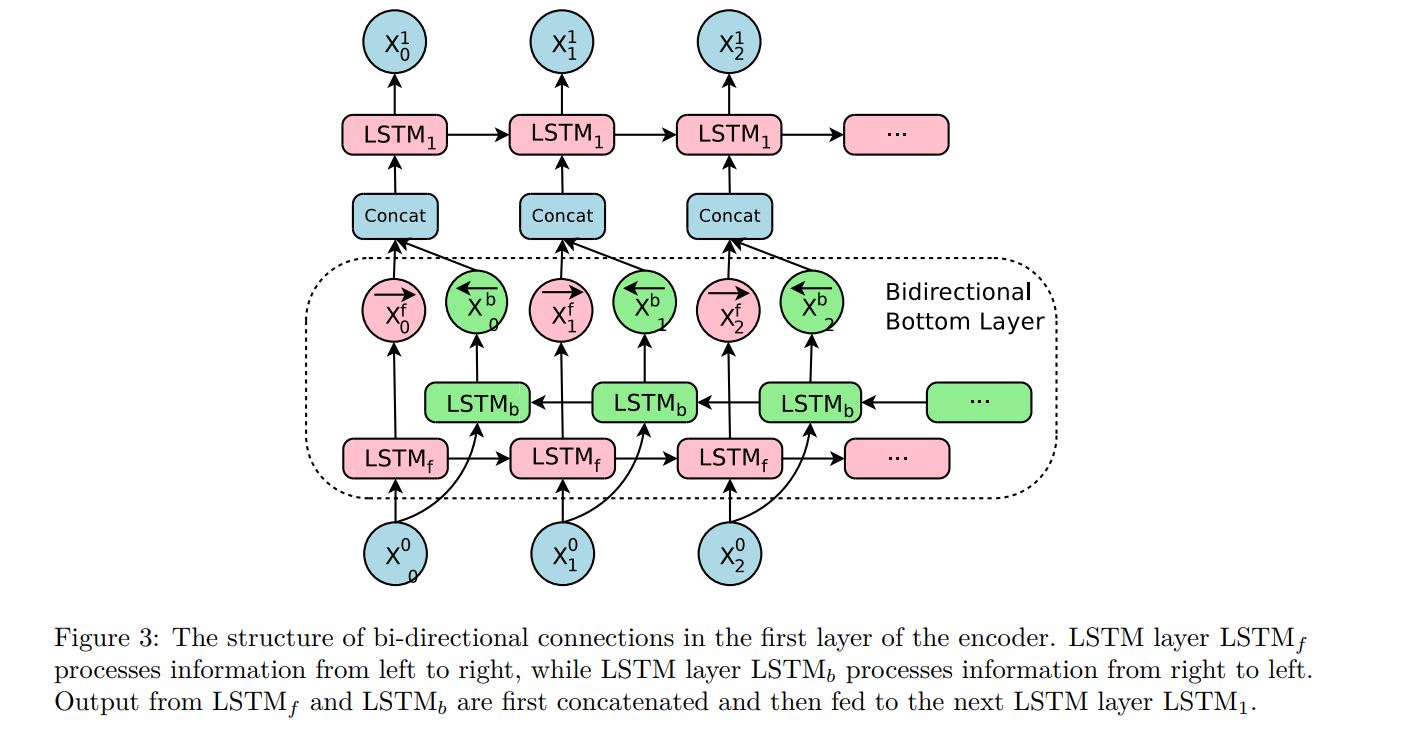
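A residual connection adds a layer's input to its output before passing the result to the next layer. Here is a minimal sketch, assuming each layer is any function that maps a vector to a vector of the same size; `residual_stack` and the toy `double` layer are hypothetical names used only for illustration.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def residual_stack(x: Vector, layers: Sequence[Layer]) -> Vector:
    """Apply each layer in turn, adding the layer's input back onto its output."""
    for layer in layers:
        out = layer(x)
        # Residual connection: output of this layer plus its input, elementwise.
        x = [o + i for o, i in zip(out, x)]
    return x


def double(v: Vector) -> Vector:
    # Toy "layer" used only for the example.
    return [2.0 * a for a in v]


print(residual_stack([1.0, 2.0], [double, double]))  # → [9.0, 18.0]
```

The first layer gives `[2, 4] + [1, 2] = [3, 6]` and the second gives `[6, 12] + [3, 6] = [9, 18]`. Because each layer only has to learn a correction on top of its input, deep stacks of LSTM layers become easier to train.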

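Plus, to generate the feature vectors, they used a bi-directional LSTM in the bottom encoder layer, so that the representation at each input position captures both long- and short-range dependencies on the words before and after it.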
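The bi-directional idea can be sketched as one recurrent pass left-to-right and another right-to-left, with the two states concatenated at each position. In this minimal sketch, `toy_cell` is a plain tanh recurrence standing in for a real LSTM cell (an assumption to keep the example dependency-free), and `run_rnn` and `bidirectional` are hypothetical names.

```python
import math
from typing import Callable, List

Vector = List[float]
Cell = Callable[[Vector, Vector], Vector]  # (input, previous state) -> new state


def toy_cell(x: Vector, h: Vector) -> Vector:
    # Stand-in for an LSTM cell: a plain tanh recurrence, not a real LSTM.
    return [math.tanh(xi + hi) for xi, hi in zip(x, h)]


def run_rnn(cell: Cell, inputs: List[Vector], init: Vector) -> List[Vector]:
    """Run a recurrent cell over the inputs and collect the state at every step."""
    states, h = [], init
    for x in inputs:
        h = cell(x, h)
        states.append(h)
    return states


def bidirectional(cell_fw: Cell, cell_bw: Cell, inputs: List[Vector], init: Vector) -> List[Vector]:
    forward = run_rnn(cell_fw, inputs, init)
    # Run over the reversed sequence, then reverse the states back so index t lines up.
    backward = run_rnn(cell_bw, inputs[::-1], init)[::-1]
    # Each position now combines left context (forward) and right context (backward).
    return [f + b for f, b in zip(forward, backward)]


features = bidirectional(toy_cell, toy_cell, [[1.0], [0.0], [-1.0]], [0.0])
print(features)
```

With a single forward pass, the state at the first word would know nothing about later words; the backward pass fills in that right-hand context.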

Apart from the above, they note that, for computational speed, they parallelized the model across its layers.

But I am not going to go through those details here.

Another problem, particular to NLP, is out-of-vocabulary (OOV) words: rare words cause trouble in an open-vocabulary system.

To handle this, they used sub-word units produced by a wordpiece model.

• Word: Jet makers feud over seat width with big orders at stake

• Wordpieces: _J et _makers _fe ud _over _seat _width _with _big _orders _at _stake

Here, `_` is a special marker for the beginning of a word.
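The wordpiece example above can be reproduced with a greedy longest-match-first segmenter. This is an assumption for illustration: the paper learns its wordpiece vocabulary from data, and I am not claiming this is its exact segmentation procedure. The tiny vocabulary below is hand-picked to match the example, and `wordpiece_segment` and `detokenize` are hypothetical names.

```python
from typing import List, Set


def wordpiece_segment(sentence: str, vocab: Set[str]) -> List[str]:
    """Greedy longest-match-first segmentation; "_" marks the start of a word."""
    pieces: List[str] = []
    for word in sentence.split():
        text = "_" + word
        start = 0
        while start < len(text):
            # Shrink the candidate from the right until it is in the vocabulary.
            end = len(text)
            while end > start and text[start:end] not in vocab:
                end -= 1
            if end == start:
                raise ValueError(f"cannot segment {word!r} with this vocabulary")
            pieces.append(text[start:end])
            start = end
    return pieces


def detokenize(pieces: List[str]) -> str:
    # Concatenate and turn each word-start marker back into a space
    # (assumes the original words contain no "_" themselves).
    return "".join(pieces).replace("_", " ").strip()


VOCAB = {"_J", "et", "_makers", "_fe", "ud", "_over", "_seat", "_width",
         "_with", "_big", "_orders", "_at", "_stake"}

sentence = "Jet makers feud over seat width with big orders at stake"
pieces = wordpiece_segment(sentence, VOCAB)
print(" ".join(pieces))
# → _J et _makers _fe ud _over _seat _width _with _big _orders _at _stake
```

Because `_` marks only word beginnings, the original sentence can be recovered exactly from the pieces, and a rare word like "feud" is still representable as known sub-word units instead of an unknown token.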

If you want the details, read Section 4, Segmentation Approaches.