This paper, Extractive Summarization using Continuous Vector Space Models (Kågebäck et al., CVSC-WS 2014), is about extractive summarization: building a summary of a document by selecting its most representative sentences, using continuous vector representations.

I also found that it helped me understand architectures based on neural network language modeling.

For example: feed-forward neural networks, auto-encoders, and recursive neural networks.

These architectures are described below:

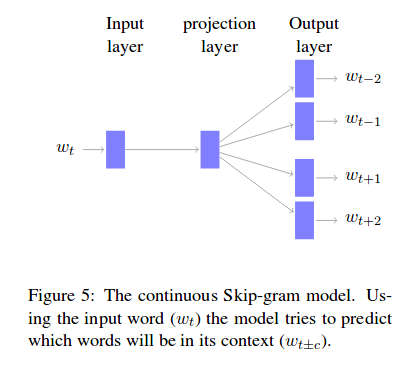

The basic and best-known model is skip-gram: the target word (the center of the context) is used as input to predict the surrounding words within a fixed window.
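To make "predict the surrounding words" concrete, here is a minimal sketch of how skip-gram training pairs are generated from a sentence. The function name `skipgram_pairs` and the window size are my own illustrative choices; the model itself (embedding lookup plus a softmax or negative-sampling output layer) is not shown.

```python
def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs: each word is paired with every neighbour within `window`."""
    pairs = []
    for i, target in enumerate(tokens):
        # Context positions run from i - window to i + window, clipped to the sentence, skipping i itself.
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


print(skipgram_pairs(["the", "cat", "sat", "on"], window=1))
```

Each pair becomes one training example: the model sees the target word and is trained to assign high probability to the context word.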

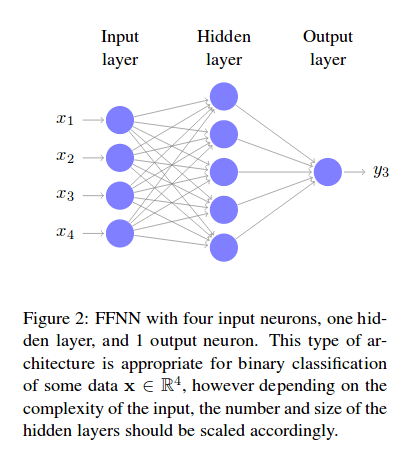

Feed-forward neural network: data flows in one direction only, from the input layer through the hidden layers to the output layer, with no cycles.
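A minimal sketch of a feed-forward pass in plain Python, assuming tanh on the hidden layers and a linear output layer (both are my choices for illustration). Each layer only reads the output of the previous one, which is what "one direction" means.

```python
import math


def dense(x, W, b):
    # One fully connected layer: y_j = sum_i W[j][i] * x[i] + b[j]
    return [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j for row, b_j in zip(W, b)]


def feed_forward(x, layers):
    """Apply each (W, b) layer in order; tanh on hidden layers, no activation on the output."""
    h = x
    for k, (W, b) in enumerate(layers):
        h = dense(h, W, b)
        if k < len(layers) - 1:
            h = [math.tanh(v) for v in h]
    return h


# Toy weights: input (2) -> hidden (2) -> output (1)
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(feed_forward([1.0, 1.0], layers))
```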

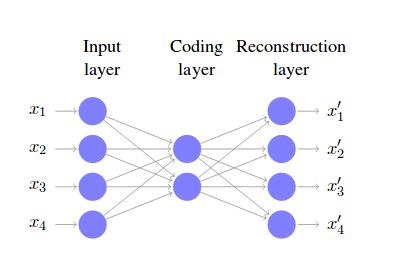

Auto-encoder: the network is symmetric around a central coding layer and is trained so that its output reproduces its input, so the input and output layers have the same size.
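A minimal sketch of one auto-encoder pass, assuming a tanh coding layer, a linear decoder, and separate (untied) encoder and decoder weights; these choices and the names `autoencode` and `reconstruction_error` are mine. Training, which would adjust the weights to shrink the reconstruction error, is not shown.

```python
import math


def dense(x, W, b):
    # Fully connected layer: y_j = sum_i W[j][i] * x[i] + b[j]
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def autoencode(x, W_enc, b_enc, W_dec, b_dec):
    """Compress x into a smaller code, then reconstruct x from that code."""
    code = [math.tanh(v) for v in dense(x, W_enc, b_enc)]  # central coding layer
    x_hat = dense(code, W_dec, b_dec)                        # same size as x
    return code, x_hat


def reconstruction_error(x, x_hat):
    # Squared error between the input and its reconstruction
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


# Toy example: 3-dim input, 2-dim code
x = [1.0, 0.0, 1.0]
W_enc, b_enc = [[0.5, 0.0, 0.5], [0.0, 1.0, 0.0]], [0.0, 0.0]
W_dec, b_dec = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]], [0.0, 0.0, 0.0]
code, x_hat = autoencode(x, W_enc, b_enc, W_dec, b_dec)
print(code, x_hat, reconstruction_error(x, x_hat))
```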

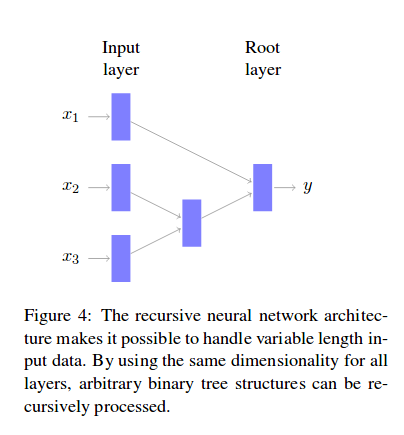

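Recursive neural network: a sentence is first parsed into a binary tree, and the same neural network is then applied recursively at each node, combining the vectors of the two children into a vector for the parent.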
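A minimal sketch of recursive composition over a binary parse tree, assuming the common form p = tanh(W[c1; c2] + b), where [c1; c2] is the concatenation of the two child vectors. The tree is written as nested pairs; the embeddings and weights are toy values I made up.

```python
import math


def compose(c1, c2, W, b):
    # Parent vector p = tanh(W [c1; c2] + b); the SAME W and b are reused at every node.
    children = c1 + c2  # concatenate two d-dim lists into one 2d-dim list
    return [math.tanh(sum(w * c for w, c in zip(row, children)) + bj) for row, bj in zip(W, b)]


def encode_tree(tree, embeddings, W, b):
    """`tree` is either a word (leaf) or a (left, right) pair from a binary parse."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(encode_tree(left, embeddings, W, b),
                   encode_tree(right, embeddings, W, b), W, b)


embeddings = {"the": [0.1, 0.2], "cat": [0.4, -0.3], "sat": [0.0, 0.5]}
W = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]  # d x 2d with d = 2
b = [0.0, 0.0]

# ((the cat) sat): one binary parse of "the cat sat"
vec = encode_tree((("the", "cat"), "sat"), embeddings, W, b)
print(vec)
```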

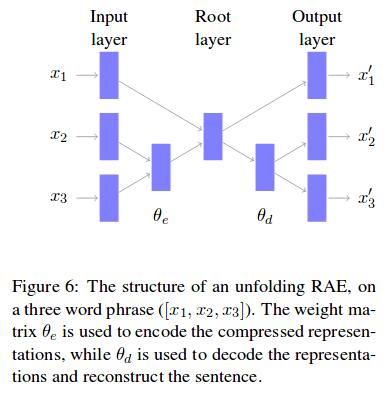

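Recursive auto-encoder: a combination of the auto-encoder and the recursive neural network. At each node of the tree, an auto-encoder compresses the two child vectors into a parent vector from which the children can be reconstructed.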
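A minimal sketch of a plain recursive auto-encoder, not necessarily the exact variant used in the paper: at each node the encoder maps [c1; c2] to a parent vector, a linear decoder tries to reconstruct [c1; c2] from it, and the squared reconstruction errors are summed over the tree. The names, weights, and the tanh/linear choices are my own assumptions; training is not shown.

```python
import math


def layer(x, W, b, act=math.tanh):
    # Fully connected layer followed by an activation
    return [act(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W, b)]


def rae_encode(tree, emb, W_e, b_e, W_d, b_d):
    """Return (vector, total reconstruction error) for a binary tree of words."""
    if isinstance(tree, str):
        return emb[tree], 0.0  # leaves are word embeddings, nothing to reconstruct
    v1, e1 = rae_encode(tree[0], emb, W_e, b_e, W_d, b_d)
    v2, e2 = rae_encode(tree[1], emb, W_e, b_e, W_d, b_d)
    children = v1 + v2
    parent = layer(children, W_e, b_e)                 # encoder: 2d -> d
    recon = layer(parent, W_d, b_d, act=lambda z: z)   # linear decoder: d -> 2d
    err = sum((c - r) ** 2 for c, r in zip(children, recon))
    return parent, e1 + e2 + err


emb = {"the": [0.1, 0.2], "cat": [0.4, -0.3], "sat": [0.0, 0.5]}
W_e = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]    # d x 2d
b_e = [0.0, 0.0]
W_d = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]  # 2d x d
b_d = [0.0, 0.0, 0.0, 0.0]

vec, total_err = rae_encode((("the", "cat"), "sat"), emb, W_e, b_e, W_d, b_d)
print(vec, total_err)
```

Training would minimize `total_err`, which pushes each parent vector to keep enough information to rebuild its children.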

The paper also explains how to build phrase and sentence vectors from word vectors.
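The simplest way to get a sentence vector is to add up its word vectors. Below is a minimal sketch of that, plus cosine similarity as an assumed way to compare two sentences; the function names and the tiny embedding table are hypothetical.

```python
import math


def sentence_vector(tokens, embeddings):
    """Sum the word vectors of a sentence; words missing from `embeddings` are skipped."""
    dim = len(next(iter(embeddings.values())))
    vec = [0.0] * dim
    for t in tokens:
        if t in embeddings:
            vec = [a + b for a, b in zip(vec, embeddings[t])]
    return vec


def cosine(u, v):
    # Cosine similarity; returns 0.0 if either vector is all zeros
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


emb = {"cat": [1.0, 0.0], "dog": [0.9, 0.1], "car": [0.0, 1.0]}
s1 = sentence_vector(["the", "cat"], emb)
s2 = sentence_vector(["a", "dog"], emb)
s3 = sentence_vector(["a", "car"], emb)
print(cosine(s1, s2), cosine(s1, s3))
```

Addition ignores word order; the recursive models described earlier are one way to build phrase vectors that depend on structure.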

These ideas are fundamental, but the paper explains word embeddings and phrase embeddings in a simple, clear way.

In short, the paper is about extracting the sentences that best summarize a document, using word and phrase embeddings to measure how similar sentences are.
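To show how sentence vectors can drive extraction, here is a simple greedy stand-in, not the paper's optimization method: it repeatedly picks the sentence closest to the document centroid while penalizing similarity to sentences already chosen. The function `greedy_extract` and the `redundancy` weight are hypothetical choices for illustration.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_extract(sent_vecs, k, redundancy=0.5):
    """Pick up to k sentence indices: close to the document centroid, not too close to each other."""
    dim = len(sent_vecs[0])
    centroid = [sum(v[i] for v in sent_vecs) for i in range(dim)]
    chosen = []
    while len(chosen) < min(k, len(sent_vecs)):
        def score(i):
            # Penalize the candidate by its highest similarity to any already-chosen sentence
            overlap = max((cosine(sent_vecs[i], sent_vecs[j]) for j in chosen), default=0.0)
            return cosine(sent_vecs[i], centroid) - redundancy * overlap
        best = max((i for i in range(len(sent_vecs)) if i not in chosen), key=score)
        chosen.append(best)
    return sorted(chosen)  # report the summary in original document order


# Toy 2-dim sentence vectors, e.g. built by summing word vectors
vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.7, 0.7]]
print(greedy_extract(vecs, 2))
```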

The paper: Extractive Summarization using Continuous Vector Space Models (Kågebäck et al., CVSC-WS 2014)
