I cite and refer to the NVMe_Express_1.2_Specification document.

NVMe Design Overview

Many early PCIe SSDs relied on vendor-specific interfaces, so the NVMe standard was defined to enable faster adoption of PCIe SSDs. NVMe was built from the ground up for non-volatile memory over PCIe.

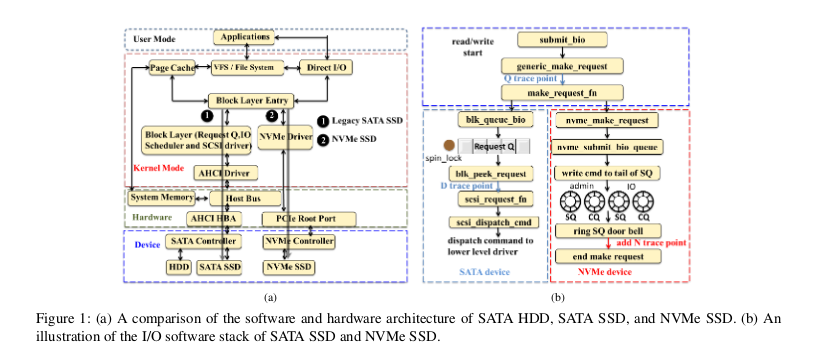

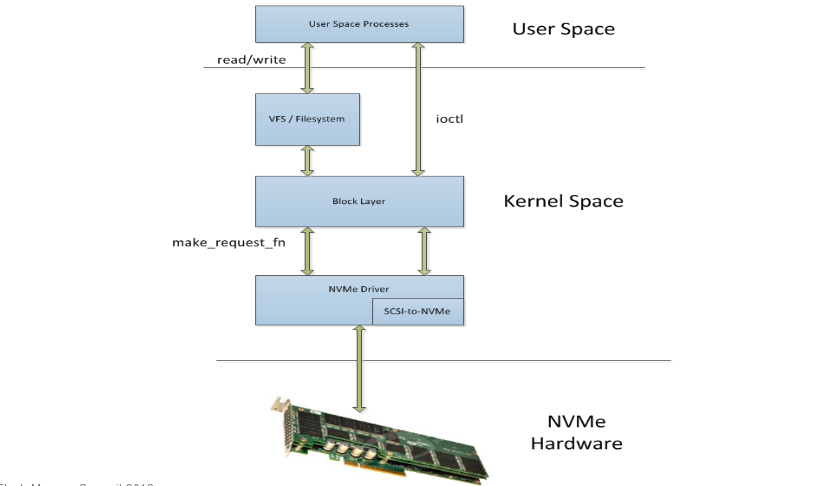

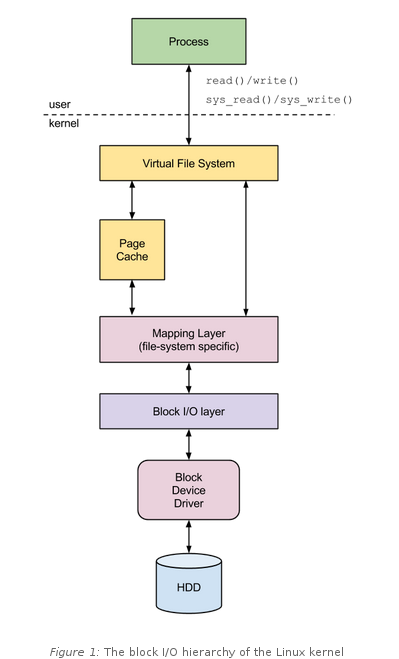

The figure below shows the function flow in the kernel.

As the figure shows, a request passes through the layers in this order:

filesystem -> block layer -> NVMe driver -> NVMe Device.

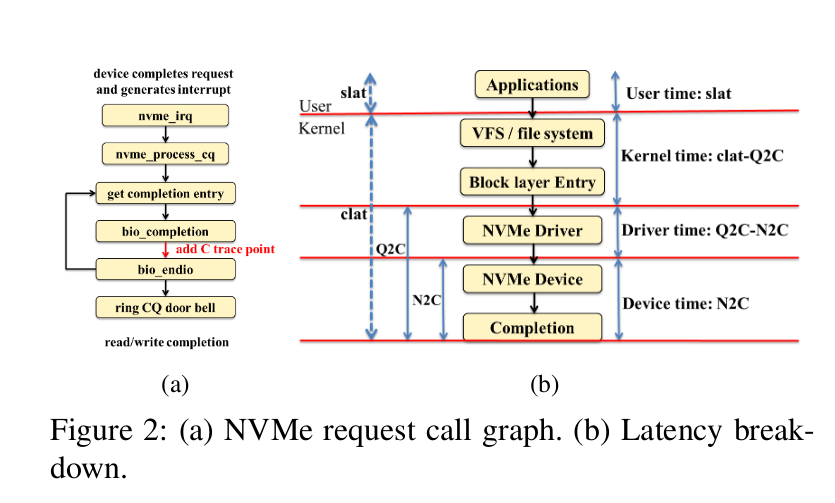

The following figures show how reads and writes reach the NVMe device, i.e. the NVMe call graph.

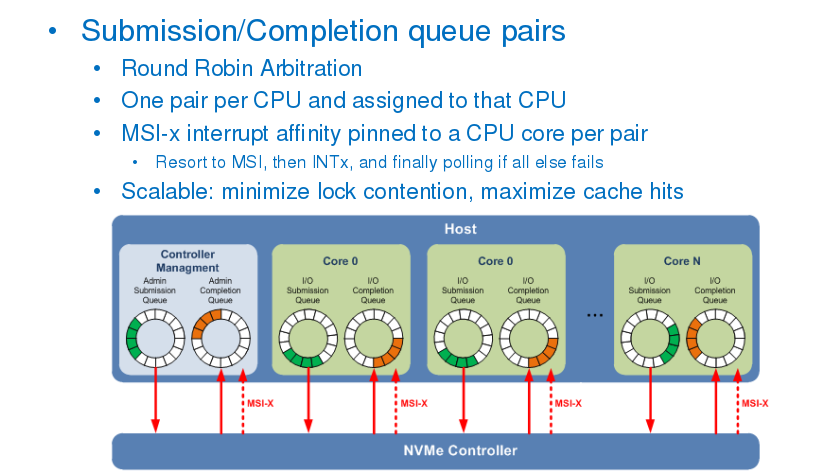

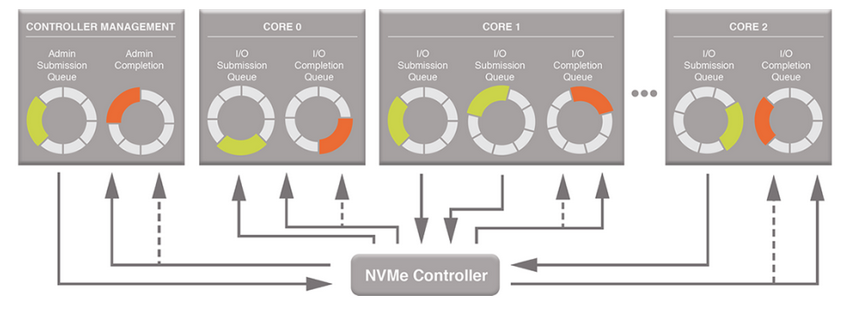

The following figure shows NVMe multi-queue.

block device read / write

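I read this introductory article (block) on block device drivers.

There are no read or write operations in the block_device_operations structure. Block I/O is not issued through per-device read/write callbacks; it goes through the request queue instead.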

NVMe overview

I cite and refer to this site (nvmexpress) for the text below.

NVMe is a scalable host controller interface.

Linux kernel NVMe function calls

If you need to read the kernel source, just use this kernel source browser.

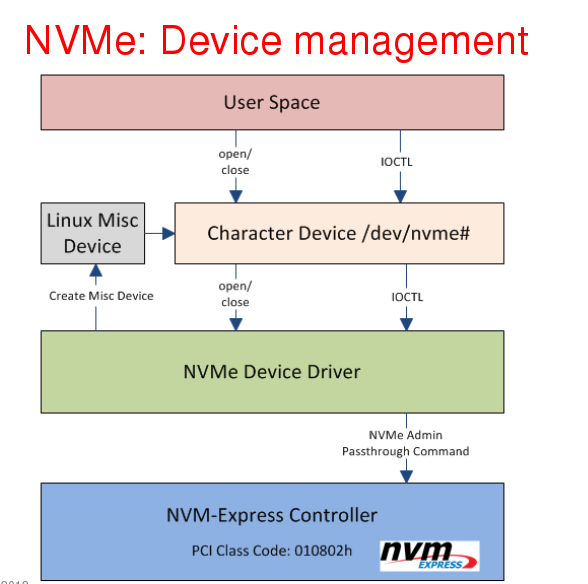

linux kernel 4.5 IOCTL function call procedure

[ 56.430104] hyun2 : function start of block_ioctl in linux/fs/block_dev.c

[ 56.430108] hyun2 : before blkdev_ioctl(bdev, mode, cmd, arg)

[ 56.430109] hyun2 : fucntion start of blkdev_ioctl in linux/block/ioctl.c

[ 56.430111] hyun2 : bdev(block_device-structure) : [??? 0x110300000-0xffff88020d4200f0 flags 0x1], mode(u64) : 393247, cmd(unsigned int) : 3225964097, arg(unsigned long) : 31125584

[ 56.430112] hyun2 : case default

[ 56.430113] hyun2 : before __blkdev_driver_ioctl(bdev, mode, cmd, arg) funtion call

[ 56.430113] hyun2 : function start of __blkdv_driver_ioctl in linux/block/ioctl.c

[ 56.430114] hyun2 : if there is disk->fops-> ioctl, start ioctl

[ 56.430115] hyun2 : bdev(block_device-structure) : [??? 0x110300000-0xffff88020d4200f0 flags 0x1], mode(u64) : 393247, cmd(unsigned int) : 3225964097, arg(unsigned long) : 31125584

[ 56.430116] hyun2 : function ioctl of nvme_ioctl test

[ 56.430116] hyun2 : case NVEM_IOCTL_ADMIN_CMD

[ 56.430118] hyun2 : bdev : 0xffff88020d420000, mode : 393247, cmd : 3225964097, arg : 31125584

block_ioctl

------> blkdev_ioctl

------> __blkdev_driver_ioctl

--------> nvme_ioctl, and that's it
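The cmd value in the log above (3225964097) can be decoded by hand, which helps confirm which case nvme_ioctl takes. The following is a minimal sketch, assuming the asm-generic ioctl layout used on x86 (8-bit number, 8-bit type, 14-bit size, 2-bit direction); the struct and function names are my own and are not kernel APIs.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Field widths of the asm-generic ioctl encoding (an assumption: some
 * architectures split the size and direction bits differently). */
enum { NR_BITS = 8, TYPE_BITS = 8, SIZE_BITS = 14, DIR_BITS = 2 };

struct ioc_fields {
    unsigned nr;    /* command number within the driver */
    unsigned type;  /* "magic" character that identifies the driver */
    unsigned size;  /* size of the argument structure in bytes */
    unsigned dir;   /* bit 0 = write, bit 1 = read, from the caller's view */
};

/* Hypothetical helper: split a raw ioctl number into its four fields. */
struct ioc_fields ioc_decode(uint32_t cmd)
{
    struct ioc_fields f;

    /* The four fields must cover exactly the 32 bits of the command. */
    assert(NR_BITS + TYPE_BITS + SIZE_BITS + DIR_BITS == 32);

    f.nr   = cmd & ((1u << NR_BITS) - 1);
    f.type = (cmd >> NR_BITS) & ((1u << TYPE_BITS) - 1);
    f.size = (cmd >> (NR_BITS + TYPE_BITS)) & ((1u << SIZE_BITS) - 1);
    f.dir  = (cmd >> (NR_BITS + TYPE_BITS + SIZE_BITS)) & ((1u << DIR_BITS) - 1);
    return f;
}

/* Print the decoded fields in a readable form. */
void ioc_print(uint32_t cmd)
{
    struct ioc_fields f = ioc_decode(cmd);

    printf("cmd=0x%08x dir=%u size=%u type='%c' nr=0x%02x\n",
           (unsigned)cmd, f.dir, f.size, (char)f.type, f.nr);
}
```

Calling ioc_print(3225964097u) gives cmd=0xc0484e41 with dir=3 (read and write), size=72, type='N' and nr=0x41. That is consistent with the NVME_IOCTL_ADMIN_CMD case seen in the log.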

linux kernel 4.5 block layer read / write function call procedure

[ 538.738583] hyun2 : submit_bio in linux/block/blk-core.c file

[ 538.738585] hyun2 : before generic_make_request(bio) call in linux/block/blk-core.c file

[ 538.738587] hyun2 : function start of generic_make_request in linux/block/blk-core.c file

[ 538.738588] hyun2 : befor make_reuest_fn in linux/block/blk-core.c file

[ 538.738592] hyun2 : function start of generic_make_request in linux/block/blk-core.c file

[ 538.738593] hyun2 : after blk_queue_exit(q) in linux/block/blk-core.c file

Briefly:

submit_bio function call

------> generic_make_request function call

------> make_request_fn of the request queue

-------> the next stage (not traced here)
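The chain above is a dispatch through a function pointer stored in the request queue. The following is a toy userspace model of that idea only, not kernel code: every toy_ name is hypothetical, and the real generic_make_request also performs checks and handles stacked devices, which I leave out.

```c
#include <assert.h>
#include <stddef.h>

struct toy_bio;
struct toy_queue;

/* Each queue carries a pointer to the function that accepts bios for it. */
typedef void (*toy_make_request_fn)(struct toy_queue *q, struct toy_bio *bio);

struct toy_queue {
    toy_make_request_fn make_request_fn;
    unsigned long       submitted;      /* bios handed to this queue */
};

struct toy_bio {
    struct toy_queue *queue;            /* stands in for the bio's target queue */
    unsigned long     sector;
};

/* Stand-in for the driver-side entry point: it only counts the bio. */
void toy_driver_make_request(struct toy_queue *q, struct toy_bio *bio)
{
    (void)bio;
    q->submitted++;
}

/* Look up the target queue and call its make_request_fn. */
void toy_generic_make_request(struct toy_bio *bio)
{
    struct toy_queue *q = bio->queue;

    assert(q != NULL && q->make_request_fn != NULL);
    q->make_request_fn(q, bio);
}

/* Entry point used by the upper layers. */
void toy_submit_bio(struct toy_bio *bio)
{
    toy_generic_make_request(bio);
}
```

The point of the indirection is that the upper layers never need to know which driver sits behind the queue. Whatever function the driver registered gets called.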

I/O structure related to block device.

Kernel 4.5

    /*
     * main unit of I/O for the block layer and lower layers (ie drivers and
     * stacking drivers)
     */
    struct bio {
            struct bio *bi_next;            /* request queue link */
            struct block_device *bi_bdev;
            unsigned int bi_flags;          /* status, command, etc */
            int bi_error;
            unsigned long bi_rw;            /* bottom bits READ/WRITE,
                                             * top bits priority
                                             */

            struct bvec_iter bi_iter;

            /* Number of segments in this BIO after
             * physical address coalescing is performed.
             */
            unsigned int bi_phys_segments;

            /*
             * To keep track of the max segment size, we account for the
             * sizes of the first and last mergeable segments in this bio.
             */
            unsigned int bi_seg_front_size;
            unsigned int bi_seg_back_size;

            atomic_t __bi_remaining;

            bio_end_io_t *bi_end_io;

            void *bi_private;
    #ifdef CONFIG_BLK_CGROUP
            /*
             * Optional ioc and css associated with this bio. Put on bio
             * release. Read comment on top of bio_associate_current().
             */
            struct io_context *bi_ioc;
            struct cgroup_subsys_state *bi_css;
    #endif
            union {
    #if defined(CONFIG_BLK_DEV_INTEGRITY)
                    struct bio_integrity_payload *bi_integrity; /* data integrity */
    #endif
            };

            unsigned short bi_vcnt;         /* how many bio_vec's */

            /*
             * Everything starting with bi_max_vecs will be preserved by bio_reset()
             */

            unsigned short bi_max_vecs;     /* max bvl_vecs we can hold */

            atomic_t __bi_cnt;              /* pin count */

            struct bio_vec *bi_io_vec;      /* the actual vec list */

            struct bio_set *bi_pool;

            /*
             * We can inline a number of vecs at the end of the bio, to avoid
             * double allocations for a small number of bio_vecs. This member
             * MUST obviously be kept at the very end of the bio.
             */
            struct bio_vec bi_inline_vecs[0];
    };

    #define BIO_RESET_BYTES offsetof(struct bio, bi_max_vecs)

    /*
     * bio flags
     */
    #define BIO_SEG_VALID   1       /* bi_phys_segments valid */
    #define BIO_CLONED      2       /* doesn't own data */
    #define BIO_BOUNCED     3       /* bio is a bounce bio */
    #define BIO_USER_MAPPED 4       /* contains user pages */
    #define BIO_NULL_MAPPED 5       /* contains invalid user pages */
    #define BIO_QUIET       6       /* Make BIO Quiet */
    #define BIO_CHAIN       7       /* chained bio, ->bi_remaining in effect */
    #define BIO_REFFED      8       /* bio has elevated ->bi_cnt */

    /*
     * Flags starting here get preserved by bio_reset() - this includes
     * BIO_POOL_IDX()
     */
    #define BIO_RESET_BITS  13
    #define BIO_OWNS_VEC    13      /* bio_free() should free bvec */
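One detail that is easy to miss: the BIO_* values in the listing are bit positions inside bi_flags, not ready-made masks. The sketch below shows how such flags can be set and tested, and how a reset can keep only the bits from BIO_RESET_BITS upward, as the comment in the listing describes. The toy_ helpers are hypothetical userspace stand-ins that I wrote for illustration; they are not the kernel's own functions.

```c
#include <assert.h>
#include <stdbool.h>

/* Bit positions copied from the kernel 4.5 listing. */
#define BIO_SEG_VALID   1
#define BIO_CLONED      2
#define BIO_RESET_BITS  13
#define BIO_OWNS_VEC    13

/* Test one flag: shift 1 to the flag's bit position, then mask. */
bool toy_flagged(unsigned int flags, unsigned int bit)
{
    assert(bit < 32);
    return (flags & (1u << bit)) != 0;
}

/* Return the flag word with one more flag set. */
unsigned int toy_set_flag(unsigned int flags, unsigned int bit)
{
    assert(bit < 32);
    return flags | (1u << bit);
}

/* Model of a reset: clear everything below BIO_RESET_BITS and keep
 * the bits at or above it. */
unsigned int toy_reset_flags(unsigned int flags)
{
    return flags & (~0u << BIO_RESET_BITS);
}
```

For example, a flag word with BIO_CLONED and BIO_OWNS_VEC set keeps only BIO_OWNS_VEC after toy_reset_flags, because bit 13 is the first preserved bit.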

kernel 4.5 block device

    struct block_device {
            dev_t bd_dev;                   /* not a kdev_t - it's a search key */
            int bd_openers;
            struct inode * bd_inode;        /* will die */
            struct super_block * bd_super;
            struct mutex bd_mutex;          /* open/close mutex */
            struct list_head bd_inodes;
            void * bd_claiming;
            void * bd_holder;
            int bd_holders;
            bool bd_write_holder;
    #ifdef CONFIG_SYSFS
            struct list_head bd_holder_disks;
    #endif
            struct block_device * bd_contains;
            unsigned bd_block_size;
            struct hd_struct * bd_part;
            /* number of times partitions within this device have been opened. */
            unsigned bd_part_count;
            int bd_invalidated;
            struct gendisk * bd_disk;
            struct request_queue * bd_queue;
            struct list_head bd_list;
            /*
             * Private data. You must have bd_claim'ed the block_device
             * to use this. NOTE: bd_claim allows an owner to claim
             * the same device multiple times, the owner must take special
             * care to not mess up bd_private for that case.
             */
            unsigned long bd_private;

            /* The counter of freeze processes */
            int bd_fsfreeze_count;
            /* Mutex for freeze */
            struct mutex bd_fsfreeze_mutex;
    };
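The first member, bd_dev, packs the major and minor numbers into one value. Inside the kernel the minor takes the low 20 bits and the major sits above it. Below is a small userspace sketch of that packing; the toy_ names are mine and the device numbers in the note are example values only.

```c
#include <assert.h>
#include <stdint.h>

/* The in-kernel dev_t keeps the minor number in the low 20 bits. */
#define TOY_MINORBITS 20
#define TOY_MINORMASK ((1u << TOY_MINORBITS) - 1)

/* Pack a major/minor pair into a single 32-bit device number. */
uint32_t toy_mkdev(uint32_t major, uint32_t minor)
{
    assert(major < (1u << (32 - TOY_MINORBITS)) && minor <= TOY_MINORMASK);
    return (major << TOY_MINORBITS) | minor;
}

/* Extract the major number (upper 12 bits). */
uint32_t toy_major(uint32_t dev)
{
    return dev >> TOY_MINORBITS;
}

/* Extract the minor number (lower 20 bits). */
uint32_t toy_minor(uint32_t dev)
{
    return dev & TOY_MINORMASK;
}
```

With this packing, major 259 and minor 0 give 0x10300000. If my reading is right, that matches the 0x110300000 printed in the ioctl log earlier: the low 32 bits would be bd_dev and the leading 1 would be bd_openers, assuming the print format read the first 8 bytes of the structure as one number.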

A small hint about blk-mq.

If you want an easier way to search the Linux history,

just use the blk-mq kernel git log summary from Thomas-Krenn.

Differences in the nvme driver between kernel 3.18 and 4.5

The differences arise because the multi-queue system was introduced into the nvme driver in kernel 3.19.

If you want to know more about this, see this site (opw-linux-block-io-layer-part-4-multi).

The following cites and refers to the above site (opw-linux-block-io-layer-part-4-multi).

Chapter 1. Block I/O layer: basic concepts

The block I/O layer is the kernel subsystem in charge of managing input/output operations performed on block devices. I think block devices are mainly used as storage devices.

The following shows the role the block layer plays for an I/O request.

Tracer basics

First, check which tracers are available on your Linux system.

The command is:

cat /sys/kernel/debug/tracing/available_tracers

blk function_graph wakeup_dl wakeup_rt wakeup function nop

You can select a tracer by echoing its name to the current_tracer file. Before that, I am going to check which tracer is already selected.

The command is:

cat /sys/kernel/debug/tracing/current_tracer

nop

This means that no tracer is selected.

So now I am going to select the function_graph tracer.

The commands are:

[root@localhost kernel]# echo function_graph > /sys/kernel/debug/tracing/current_tracer

[root@localhost kernel]# cat /sys/kernel/debug/tracing/current_tracer

function_graph
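Before echoing a name into current_tracer, it can be worth checking that the name really appears in available_tracers. Here is a minimal sketch of that check on a string you have already read from the file. The function name tracer_listed is hypothetical, and the match is done on whole words so that "wake" does not match "wakeup".

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Return true if `name` appears as a whole whitespace-separated word
 * in `list` (the contents of available_tracers). */
bool tracer_listed(const char *list, const char *name)
{
    size_t len = strlen(name);
    const char *p = list;

    assert(len > 0);
    while (*p) {
        const char *start;

        while (*p == ' ' || *p == '\n')         /* skip separators */
            p++;
        start = p;
        while (*p && *p != ' ' && *p != '\n')   /* scan one word */
            p++;
        if ((size_t)(p - start) == len && strncmp(start, name, len) == 0)
            return true;
    }
    return false;
}
```

To use it on a real system, read /sys/kernel/debug/tracing/available_tracers into a buffer first (for example with fopen and fgets). That usually needs root and a mounted debugfs.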

At this point, we can finally start tracing. First, let’s choose an interesting test case to trace. Let’s say we want to follow the path of a 4KB read I/O request performed on sda.

Please refer to this site (2014_06_01_archive).

Meaning of the posix_memalign function

Modern memory access hardware works with aligned data, which is why you should read this site (why-does-cpu-access-memory-on-a-word-boundary).

This article (unaligned-memory-access.txt) is also a good way to learn about aligned data.
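As a concrete case, a 4KB read test typically needs a buffer whose address is a multiple of some block size, and posix_memalign is the usual way to get one. The sketch below is a minimal example under my own assumptions: the helper names alloc_aligned and is_aligned are hypothetical, and the 4096-byte alignment in the note is just an example value.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200112L   /* expose posix_memalign in strict modes */
#endif

#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Allocate `size` zeroed bytes whose address is a multiple of `align`.
 * Returns NULL on failure; release the buffer with free(). */
void *alloc_aligned(size_t align, size_t size)
{
    void *buf = NULL;

    /* posix_memalign needs a power of two that is also a multiple
     * of sizeof(void *). */
    assert(align >= sizeof(void *) && (align & (align - 1)) == 0);

    if (posix_memalign(&buf, align, size) != 0)
        return NULL;
    memset(buf, 0, size);
    return buf;
}

/* Check whether a pointer is aligned to `align` bytes. */
int is_aligned(const void *p, size_t align)
{
    return ((uintptr_t)p % align) == 0;
}
```

For a 4KB read you would call alloc_aligned(4096, 4096) and pass the result to read(). A plain malloc gives no such guarantee, which matters for direct I/O, where the buffer generally has to be aligned to the device's block size.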

NVMe and SSD layout

I referred to this site (SSDs-basics-and-details-on-performance).

This site explains the basic functions of an SSD.

NAND flash is organized into several units: cell, page, block, plane, and die.

Multiple cells make up a page.

Multiple pages make up a block.

Multiple blocks make up a plane.

Multiple planes make up a die.
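The hierarchy above turns into simple multiplication once you pick a geometry. The following sketch computes the size of each level from the one below it. The struct, the function names and all numbers in the note are hypothetical examples, not the values of any particular NAND part.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical NAND geometry: how many of each unit fit in the next. */
struct nand_geometry {
    uint32_t page_bytes;
    uint32_t pages_per_block;
    uint32_t blocks_per_plane;
    uint32_t planes_per_die;
};

/* A block is pages_per_block pages. */
uint64_t block_bytes(const struct nand_geometry *g)
{
    assert(g);
    return (uint64_t)g->page_bytes * g->pages_per_block;
}

/* A plane is blocks_per_plane blocks. */
uint64_t plane_bytes(const struct nand_geometry *g)
{
    return block_bytes(g) * g->blocks_per_plane;
}

/* A die is planes_per_die planes. */
uint64_t die_bytes(const struct nand_geometry *g)
{
    return plane_bytes(g) * g->planes_per_die;
}
```

With an example geometry of 16 KiB pages, 256 pages per block, 1024 blocks per plane and 2 planes per die, a block is 4 MiB, a plane is 4 GiB and a die is 8 GiB.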

vblk stands for virtual block.

This means that blocks in different planes are treated as one unit.

The write unit (WU) is different from the vblk.

The vblk is the erase unit.

A slice is just a chunk.
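To make the vblk idea concrete, here is a small sketch. I assume, purely for illustration, that vblk number v groups block v from every plane. Real devices may map them differently, and all names and sizes here are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

struct phys_block {
    uint32_t plane;
    uint32_t block;
};

/* Assumption: vblk `vblk` consists of block number `vblk` in each plane.
 * Fills `out` with one entry per plane and returns the entry count. */
uint32_t vblk_members(uint32_t vblk, uint32_t planes,
                      struct phys_block *out, uint32_t cap)
{
    uint32_t p;

    assert(out && cap >= planes);
    for (p = 0; p < planes; p++) {
        out[p].plane = p;
        out[p].block = vblk;
    }
    return planes;
}

/* Erasing a vblk erases one block in every plane. */
uint64_t vblk_erase_bytes(uint32_t planes, uint64_t block_bytes)
{
    return (uint64_t)planes * block_bytes;
}

/* Number of write units (WU) that fit into one vblk. */
uint64_t wus_per_vblk(uint32_t planes, uint64_t block_bytes, uint64_t wu_bytes)
{
    assert(wu_bytes != 0 && vblk_erase_bytes(planes, block_bytes) % wu_bytes == 0);
    return vblk_erase_bytes(planes, block_bytes) / wu_bytes;
}
```

This shows why the WU and the vblk are different things: with 4 planes, 4 MiB blocks and a 16 KiB WU (example numbers), one erase covers 16 MiB, but it takes 1024 separate writes to fill that space again.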

Bad block

There are two types of bad blocks, often described as “physical” and “logical” bad blocks, or as “hard” and “soft” bad blocks.

If you want to know more, please see this site (howtogeek) and this site.

Procedure for an I/O queue pair

Creation and deletion of Submission Queues and their associated Completion Queues need to be ordered correctly by host software.

Host software shall create the Completion Queue before creating any associated Submission Queue.

A Submission Queue may be created at any time after the associated Completion Queue is created.

Host software shall delete all associated Submission Queues prior to deleting a Completion Queue.

To abort all commands submitted to a Submission Queue, host software should issue a Delete I/O Submission Queue command for that queue.
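The ordering rules above can be written as a tiny state model. This is only a sketch of the rules as stated here, with hypothetical toy_ names and a made-up limit on queue IDs. It does not talk to a real controller and does not model the actual admin commands.

```c
#include <assert.h>
#include <stdbool.h>

#define TOY_MAX_QID 8   /* made-up limit; ID 0 is left for the admin queue */

struct toy_ctrl {
    bool cq_exists[TOY_MAX_QID];
    bool sq_exists[TOY_MAX_QID];
    int  sq_cqid[TOY_MAX_QID];   /* the CQ each SQ is associated with */
};

/* I/O queue IDs run from 1 up to the made-up limit. */
static bool toy_qid_ok(int qid)
{
    return qid >= 1 && qid < TOY_MAX_QID;
}

/* Start with no I/O queues at all. */
void toy_ctrl_init(struct toy_ctrl *c)
{
    int i;

    assert(c);
    for (i = 0; i < TOY_MAX_QID; i++) {
        c->cq_exists[i] = false;
        c->sq_exists[i] = false;
        c->sq_cqid[i] = 0;
    }
}

/* A CQ can be created whenever its ID is free. */
bool toy_create_cq(struct toy_ctrl *c, int cqid)
{
    if (!toy_qid_ok(cqid) || c->cq_exists[cqid])
        return false;
    c->cq_exists[cqid] = true;
    return true;
}

/* Rule: the associated CQ must already exist before an SQ is created. */
bool toy_create_sq(struct toy_ctrl *c, int sqid, int cqid)
{
    if (!toy_qid_ok(sqid) || !toy_qid_ok(cqid))
        return false;
    if (c->sq_exists[sqid] || !c->cq_exists[cqid])
        return false;
    c->sq_exists[sqid] = true;
    c->sq_cqid[sqid] = cqid;
    return true;
}

/* An existing SQ can be deleted at any time. */
bool toy_delete_sq(struct toy_ctrl *c, int sqid)
{
    if (!toy_qid_ok(sqid) || !c->sq_exists[sqid])
        return false;
    c->sq_exists[sqid] = false;
    return true;
}

/* Rule: every associated SQ must be deleted before the CQ is deleted. */
bool toy_delete_cq(struct toy_ctrl *c, int cqid)
{
    int i;

    if (!toy_qid_ok(cqid) || !c->cq_exists[cqid])
        return false;
    for (i = 1; i < TOY_MAX_QID; i++)
        if (c->sq_exists[i] && c->sq_cqid[i] == cqid)
            return false;
    c->cq_exists[cqid] = false;
    return true;
}
```

In this model, creating SQ 1 before CQ 1 fails, and deleting CQ 1 fails until every SQ that points at it has been deleted. Several SQs may share one CQ, which is why the delete check has to scan all of them.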

SSD

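Writes are always performed sequentially, in units of the WU. For example, if the WU is 4K and you are writing to a 128K block, the writer must always remember where it left off and write in the correct order. Note that the writer has to know the 64 block addresses and starts writing from index 0, like this: 0 1 2 3 4 5 6 7 8 9 10 11 12.

// The sector exists as the basic unit. // For this discussion, things like sector, block, and page can simply be thought of as chunks.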
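The sequential-write rule can be sketched as a write pointer that only accepts the next write unit in order. This is a toy model with hypothetical names; it tracks indices only and stores no data. With the 4K write unit and 128K block from the text, the block holds 32 write units.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

struct toy_block {
    uint32_t wu_bytes;   /* write unit size in bytes */
    uint32_t wu_count;   /* number of write units in the block */
    uint32_t next_wu;    /* index of the next write unit that may be written */
};

/* Set up an empty block; the block size must be a multiple of the WU. */
void toy_block_init(struct toy_block *b, uint32_t block_bytes, uint32_t wu_bytes)
{
    assert(b && wu_bytes != 0 && block_bytes % wu_bytes == 0);
    b->wu_bytes = wu_bytes;
    b->wu_count = block_bytes / wu_bytes;
    b->next_wu = 0;
}

/* Accept a write only if it targets the next write unit in order.
 * Writes that skip ahead, repeat an index, or go past the end fail. */
bool toy_block_write(struct toy_block *b, uint32_t wu_index)
{
    if (b->next_wu >= b->wu_count || wu_index != b->next_wu)
        return false;
    b->next_wu++;
    return true;
}
```

The writer only has to remember next_wu. Writing index 1 before index 0 is rejected, and once index 31 has been written the block is full until it is erased.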