This paper,Centroid-based Text Summarization through Compositionality of Word Embeddings (Rossiello et al., MultiLing-WS 2017), is about text summarization based on cetroid, and then they experiment multi-documents and a multi-lingual sigle document.

This paper’s idea is using word embedding which is better on what words is similar on syntantic and semantic relationship rather than BOW(bag-of-words).

Representation from BOW is orthogonal when they don’t have word even though their meaning is the same.

BUT neural network language model is better rather than the relationship above. I mean inference using linear algebra of syntactic and semantic relationship.

Method for text summarization of this paper that I am introducing is extrating centroid words which is represntative for each document per document.

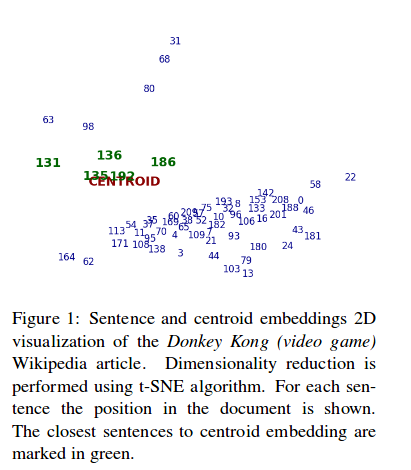

Then they are just comparing centroid vector to sentence vector in a document to select the representative summary of sentences in the document like this.

This paper argue word embedding is great on the effectivness of the compositionality to encode the semantic relation between words through vector dense representations.

I got idea about the effectiveness of compositionality of word embedding is better that encoding the semantic relations between words through vector dense representations.

This paper emphasizes about representation learning, when you summarize text, you could external knowledge. this means representation of word embedding from other corpus could be used to do something like NLP in the original corpus.

The paper: Centroid-based Text Summarization through Compositionality of Word Embeddings (Rossiello et al., MultiLing-WS 2017)